Housekeeping & Recent Content:

- A detailed overview of Rubrik’s business and stock will be published soon.

- My current portfolio & performance vs. the S&P 500 can be found here. I exited a position this week, trimmed another and added to some holdings.

- Access to 40+ earnings reviews from the past season here.

- If you haven’t already, please mark this email address as a safe sender. It is greatly appreciated and will help a ton with deliverability as we navigate the platform migration.

Table of Contents:

- Micron – Earnings Review

- Nvidia – Investment

- Meta – Milestone & Gemini Models

- Comparable Valuation Tables

- Starbucks – Changes

- MongoDB – Investor Day/Business Review

- PayPal – Getting Leaner

- Amazon – Cloud Growth

- Flutter & DraftKings – New York Data

- Mercado Libre – Argentina

- Uber – Non-Restaurant Delivery Business Update

- Headlines

- Macro

1. Micron (MU) – Earnings Review

a. Micron 101

Micron sells semiconductors for memory and storage. Its “Not And” (NAND) chips offer non-volatile data storage, which maintains stored information when a system’s power is turned off. Separately, its Dynamic Random Access Memory (DRAM) chips offer volatile memory storage for personal computers, data centers, and mobile devices. Volatile means that storage isn’t maintained when a system’s power is turned off. DRAM helps processors access real-time data to minimize processing latency. These chips are considered to be commoditized at this point, with Micron’s cost advantages providing its edge.

- DRAM is great for short-term memory storage and rapid access.

- NAND is great for longer-term memory storage and use cases that don’t need the lowest data processing latency.

These chips provide the foundation for its solid-state drives (SSDs), which are used in computer data storage and things like USB flash drives. Micron sells standalone NAND chips and also SSDs with NAND chips in them. SSDs replace hard disk drives (HDDs), as they’re more power efficient, durable and resilient. It provides basic memory cards for things like gaming devices and cameras as well.

Perhaps most interestingly, Micron offers a type of DRAM called high-bandwidth memory (HBM) used to enable the massive data needs of GenAI. It sharply improves data processing capabilities and facilitates improved data sharing between CPUs & GPUs. Nvidia is a big customer, using Micron’s HBM in its Blackwell and future Rubin systems. It also offers higher-capacity SSDs to help with LLM storage.

b. Key Points

As we work through this piece, keep in mind that Micron is a hyper-cyclical business. Demand fluctuates violently with changes in the macro environment. Margins do too, as pricing & utilization rates can experience hefty swings.

Micron pre-announced Q4 results with updated guidance in excess of its original forecast. The data below is based on those higher new targets, which analyst estimates adjusted for.

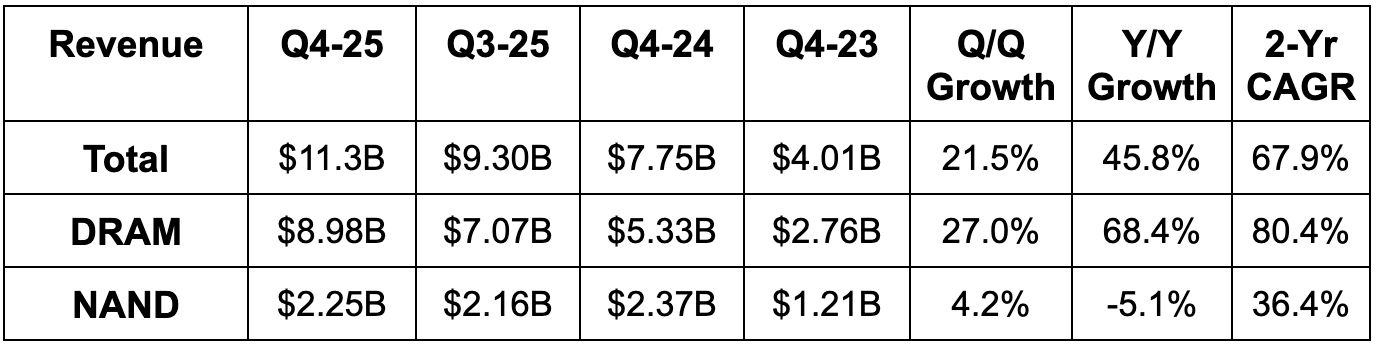

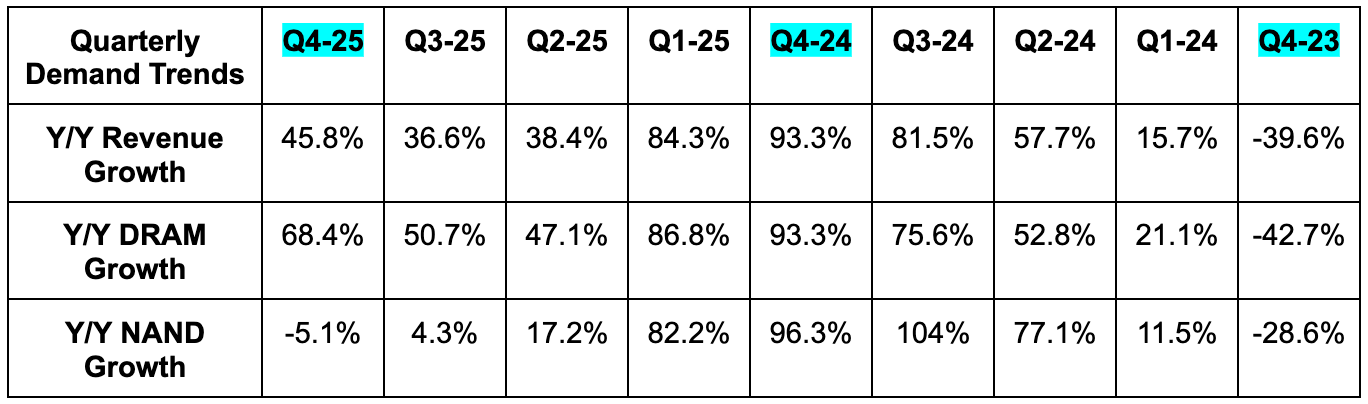

c. Demand

- Micron beat revenue estimates by 1.4% & beat guidance by 1.1%.

- In DRAM, bit shipments rose by about 13% Q/Q vs. 20%+ Q/Q growth last quarter. Average Selling Price (ASP) rose by about 11% Q/Q vs. about -2% Q/Q growth last quarter.

- In NAND, bit shipments fell by about 5% Q/Q vs. 25% Q/Q growth last quarter. ASP rose by about 8% Q/Q vs. about -8% Q/Q growth last quarter.
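Since revenue is roughly bits shipped times average selling price, the bit shipment and ASP changes above can be chained into an implied Q/Q revenue change for each product. Here is a minimal Python sketch of that arithmetic. The function name is hypothetical and the inputs are the rounded "about" figures from the bullets, so treat the outputs as ballpark numbers rather than reported results.

```python
def implied_revenue_growth(bit_growth: float, asp_growth: float) -> float:
    """Chain volume and price changes: (1 + bits) * (1 + ASP) - 1."""
    return (1 + bit_growth) * (1 + asp_growth) - 1


# Rounded inputs taken from the bullets above (assumed to be approximate).
dram = implied_revenue_growth(0.13, 0.11)
nand = implied_revenue_growth(-0.05, 0.08)

print(f"DRAM implied Q/Q revenue change: {dram:.1%}")  # roughly 25%
print(f"NAND implied Q/Q revenue change: {nand:.1%}")  # roughly 3%
```

The takeaway matches the text: DRAM grew on both volume and price, while NAND revenue was about flat because higher pricing offset lower bit shipments.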

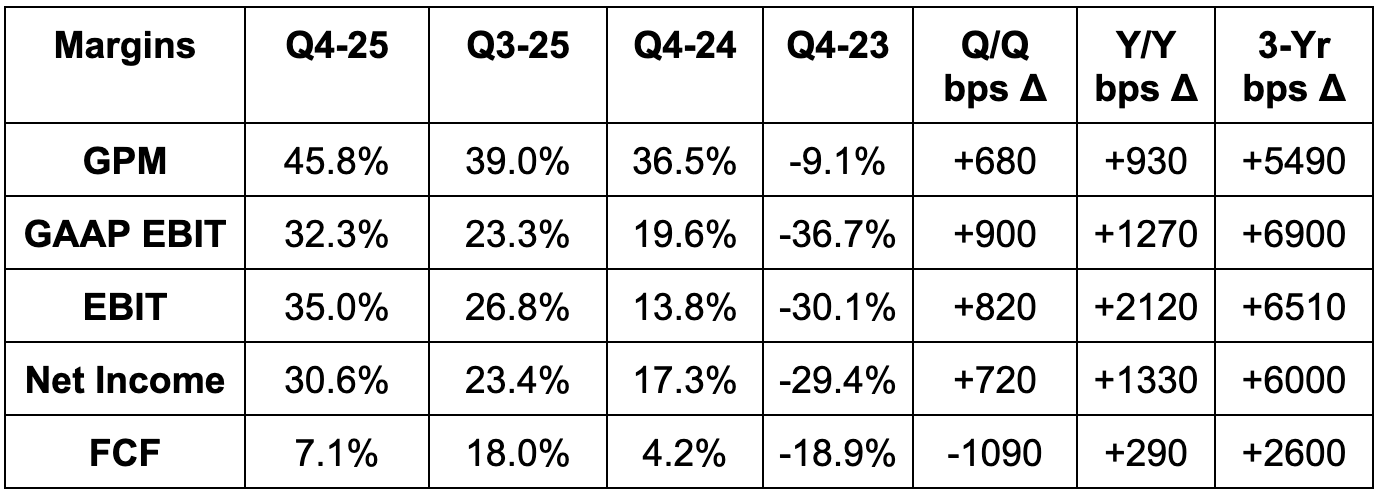

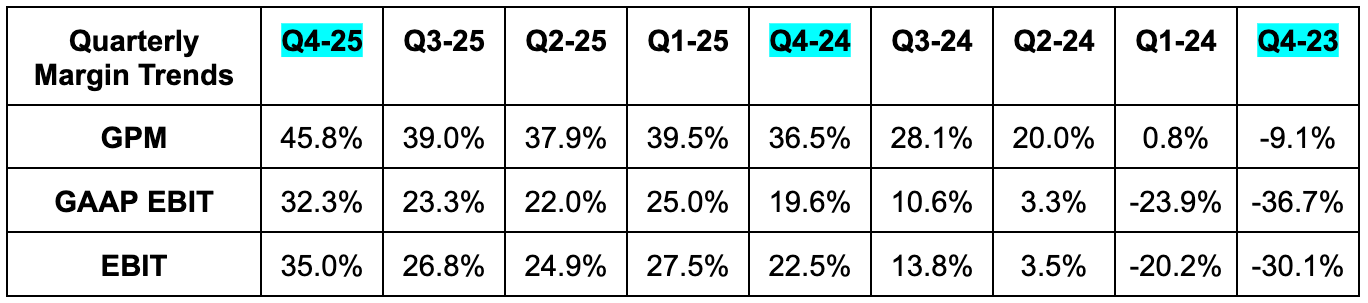

d. Profits & Margins

- Beat 44.1% GPM estimate by 160 bps & beat guidance by 120 bps.

- Beat EBIT estimate by 7.7% & beat guidance by 5.2%.

- Cloud memory EBIT margin was 48% vs. 46% Q/Q and 33% Y/Y.

- Data center EBIT margin was 25% vs. 20% Q/Q and 27% Y/Y.

- Beat $2.84 EPS estimate by $0.19 & beat guidance by $0.18.

- Beat $2.69 GAAP EPS estimate by $0.14 & beat guidance by $0.19.

e. Balance Sheet

- $10.3B in cash & equivalents.

- $1.6B in long-term investments.

- About $3.5B in untapped credit revolver capacity.

- Inventory -6% Y/Y.

- $14.6B in debt. Lowered total debt by $900M Q/Q via loan and note paydowns.

- Share count +0.5% Y/Y.

f. Guidance & Valuation

- Q1 revenue guidance beat estimates by 5%.

- Q1 EBIT guidance beat estimates by 23%.

- Q1 51.5% GPM guidance beat estimates by 600 bps.

- Q1 $3.75 EPS guidance beat $3.05 estimates by $0.70.

- FY 2026 CapEx is expected to be somewhere around $18B. That’s in line with expectations. Finally, leadership sees FCF generation materially improving Y/Y next year. This was expected.
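For readers who want to sanity-check the beat figures, the arithmetic behind them is simple. The sketch below uses only the two pairs of numbers the list states outright (the $3.75 vs. $3.05 EPS comparison and the 51.5% GPM guide with its 600 bps beat). The function names are hypothetical, and the implied GPM estimate is my own back-calculation, not a figure from the release.

```python
def dollar_beat(guidance: float, estimate: float) -> float:
    """Absolute beat in dollars per share."""
    return round(guidance - estimate, 2)


def percent_beat(guidance: float, estimate: float) -> float:
    """Beat expressed as a percentage of the estimate."""
    return (guidance / estimate - 1) * 100


def implied_margin_estimate(guided_margin_pct: float, beat_bps: float) -> float:
    """Back out the estimate from a margin guide; 100 bps = 1 percentage point."""
    return guided_margin_pct - beat_bps / 100


print(dollar_beat(3.75, 3.05))              # 0.7
print(f"{percent_beat(3.75, 3.05):.1f}%")   # about 23%
print(implied_margin_estimate(51.5, 600))   # 45.5
```

Margin beats are quoted in basis points because they are differences between two percentages, while revenue, EBIT and EPS beats are quoted as a percent of (or dollar gap to) the estimate.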

It raised its calendar year (not the same as fiscal in this case) 2025 DRAM bit growth guidance. It still guided to high-teens growth, but called this new guidance “somewhat higher.” For NAND calendar 2025 bit growth, it raised guidance from 11%-12% to around 14% Y/Y. Supply growth will lag demand growth for the rest of the year, which should support strong pricing. For calendar year 2026, they believe that supply dynamics for DRAM will remain very tight while demand conditions for DRAM and NAND “strengthen.” Over the long haul, they continue to forecast ~15% DRAM and NAND growth.

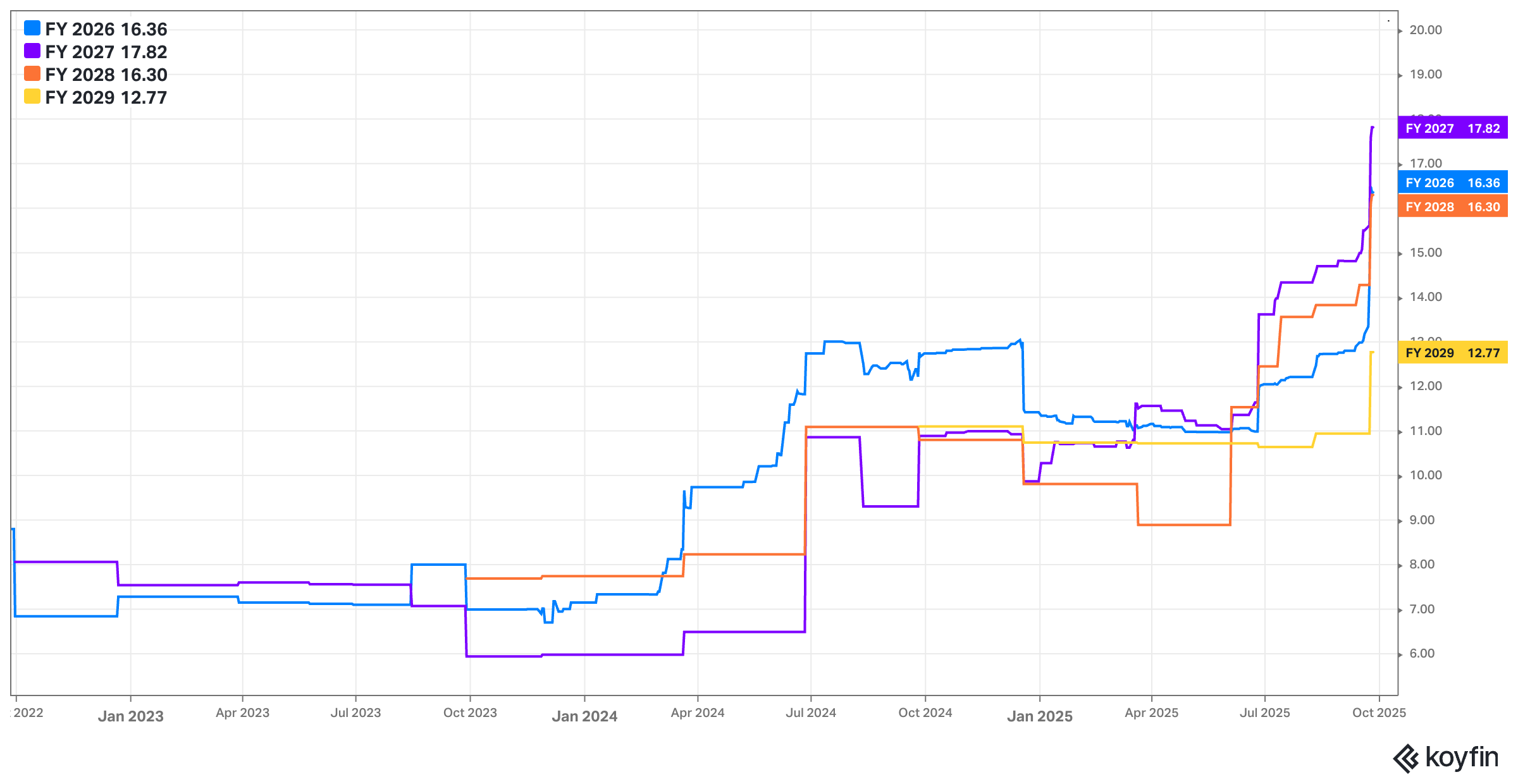

Micron trades for 11x forward EPS. EPS is expected to grow by 97% this year and by 9% next year.

g. Call & Release

AI Internal Use:

Micron talks a lot about AI creating voracious memory demand growth. While that’s certainly true, the company, like so many others, is also using this AI internally to make its own operations more efficient. They’re enjoying a 35% coding productivity uplift and a rapid acceleration to design simulation cadence. The company is also notching a 5x increase to wafer image analysis capacity and boosted yield performance by expanding the amount of data it can ingest to monitor its fabs.

Data Center – DRAM Server Demand:

For this calendar year, Micron raised server unit growth expectations from roughly 5% to 10%. The outperformance is coming mainly on the DRAM side. Within DRAM, both traditional and AI server demand surpassed leadership expectations and contributed to this raise. While it’s interesting that traditional server demand is accelerating, that’s actually also partially related to AI. The explosion in AI agents indirectly expands usage of these legacy workloads for their own multi-step workflows. And while the agents themselves are autonomous and AI-based, they’re still using traditional software to complete work on a user’s behalf. Finally, demand for traditional server-based apps is also strengthening. So… to summarize... everything is going better than expected throughout the entire DRAM business.

- Generally speaking, AI agent proliferation is also leading to a lot more overall data demand and processing. That means more demand for Micron’s memory and storage offerings.

- While the company remains upbeat on its NAND segment, a lot of the strength is coming from the DRAM side.

- The data center business is now 56% of total revenue.

Data Center – HBM DRAM Subsection:

The most exciting part of this business remains the HBM bucket. This is a specific, vitally important and high-performance piece of an overall server rack that routinely complements GPU leaders like Nvidia and AMD, as well as ASIC leaders like Broadcom. This is what enables rapid workload and task completion to occur within those racks and data centers.

HBM revenue reached nearly $2B for the quarter as rapid growth continued. It again took significant market share and is perfectly on track for HBM share to reach overall DRAM share by the end of this current month. Promises made… promises kept.

Micron now has 6 large HBM customers and agreements (with set pricing) for the “vast majority” of its HBM3 and HBM3E supply through calendar 2026. Again… Micron’s business is wildly cyclical. This is a fantastic level of visibility they’re able to provide shareholders vs. the norm. And that’s mainly because of the strong AI proliferation tailwind for its HBM business.

HBM Roadmap:

Its HBM4 12-high product is on schedule for customer delivery. Product sampling is pointing to “industry-leading bandwidth at lightning-fast pin speeds over 11 gigabits/second.” That is impressive. They believe this is a best-in-class product for power efficiency and overall performance and see their packaging and manufacturing processes as durable sources of product differentiation. HBM4E will feature an option to customize the base logic die. This is one of the chips within the HBM package used for data routing and processing. Taiwan Semi is helping a lot with this. The added customization capabilities should support more granular product-market fit, some incremental demand and higher gross margin for MU compared to its standard offerings.

They’re in “active discussions” with customers for HBM4 shipments and think they’ll “sell out the remaining HBM capacity for calendar 2026 in the coming months.” While that’s encouraging, it means they’re not sold out 15 months into the future. They were sold out 18 months into the future a few quarters ago. As discussed last quarter, this could be because performance verification processes for HBM4 have taken longer than HBM3. It could also be a preliminary sign of insatiable AI infrastructure demand finally beginning to cool. Hard to tell.

Its 1-gamma node (manufacturing process), which deploys ASML’s EUV technology to bolster bit density, efficiency and performance, “reached mature yields in record times.” This was 50% faster than the previous 1-beta node. MU is the first company in the memory space to ship and monetize chips using 1-gamma with a hyperscaler. 1-gamma boosts density by 30%, lowers power consumption by 20% and boosts performance by 15% compared to HBM chips made through 1-beta.

- Most bit supply growth during FY 2026 will be driven by 1-gamma.

More on DRAM:

For the mobile segment, its Low Power Double Data Rate 5 (LPDDR5) offering enjoyed 50% Q/Q growth. Its close partnership with Nvidia is unlocking a lot of this traction, as MU’s low-power DRAM offering has been adopted by Grace-Blackwell. While LPDDR5 has been popular for mobile use cases, it’s gaining some popularity for some AI data center workflows. Its Graphics Double Data Rate 7 (GDDR7), which is used for both gaming and high-performance compute (HPC) AI functions is also enjoying strong demand and providing best-in-class power efficiency for AI systems.

DRAM supply overall, for HBM and elsewhere, remains tight while demand remains strong. That should support growth with strong margins over the coming quarters.

- Its high-capacity dual in-line memory modules (DIMMs) provide another approach to compute memory. They focus on higher capacity, while HBM focuses on higher bandwidth and efficiency. DIMMs have higher latency and are more common in PCs, whereas HBM is geared toward AI use cases.

- HBM + DIMMs + Low Power DRAM is now a $10B annualized business for Micron vs. $2B Y/Y.

NAND:

Its NAND SSDs reached record market share, while mix-shift to data center revenue led to stronger margins. MU’s NAND suite is becoming increasingly popular for vector-based search (formatted in a way so AI models understand) and data cache tiering to minimize data storage costs. This is driving market share gains and higher-quality revenue. AI inference is still in its infancy and traction for these specific use cases should mean Micron’s NAND takes an encouraging piece of that memory demand opportunity.

Its newest G9 NAND memory chip technology is “progressing nicely” and responsibly growing with industry demand. They’ve been very careful to limit (and even reallocate) some NAND supply growth in favor of more DRAM supply growth. They are fixated on preventing large supply gluts from surfacing in the near future.

- Launched the 6th generation of its NAND SSDs, which are the first NAND-based SSDs purpose-built for data centers.

- Qualified its G9 product for both triple-level cell enterprise storage and quad-level cell enterprise storage via performance verification completion.

Manufacturing Footprint:

- Secured a key CHIPS Act grant after surpassing a key building milestone for its Idaho Factory (ID1). Initial production is set to begin during the second half of calendar 2027.

- It’s now well underway on design specs for its second Idaho fab (ID2). That will begin contributing to DRAM capacity after 2028.

- The first pieces of its Environmental Impact Statement for the planned New York fab are done.

- Installed an ASML Extreme Ultraviolet machine in its Japan fab to pave the way for its 1-gamma production. This will complement 1-gamma supply capacity that’s available in their Taiwan facility.

- Micron is getting a lot faster at fielding and deploying these machines.

- The Singapore HBM facility is on track.

Other Segments:

- Raised PC unit shipment growth guidance for calendar year 2025 from about 2%-3% to about 5%. This is due to the Windows 10 end-of-life and AI-driven demand.

- Its NAND SSDs for personal computers verified performance and other important KPIs with customers during the quarter.

- Reiterated about 2%-3% Y/Y growth for calendar year 2025 smartphone shipment expectations.

- Embedded demand is now being materially supported by drones, robots and AR/VR systems.

- Auto and industrial-related demand were both better than expected.

h. Take

Good quarter. They continue to sell everything they can make within HBM and do so with strong pricing. Market share trends are positive, the cycle is zooming and Micron is taking full advantage. I would have loved to hear that 2026 HBM capacity is also sold out. That would scream no end in sight to the current AI boom. While that lack of guarantee could be related to aforementioned slower performance verification, it could also mean insatiable demand is finally moderating.

The next few quarters will be quite telling as we gauge where we are in this supercycle. For now, they’re confident in multi-year 15% demand growth and favorable inventory dynamics carrying them through 2026. That bodes well for both demand and profits, while a newfound focus on internal AI automation adds another lever for margin expansion. Micron is adamant that their products lead the field in terms of performance. That means their results should look very good for as long as AI and macro tailwinds rage.

2. Nvidia (NVDA) – OpenAI & The AI Cycle

Nvidia is investing $100B in OpenAI. They'll get an equity stake and a lot more GPU sales in return. Good news for both companies. It does feel a little strange to be buying pieces of companies so you can sell them more GPUs. Some will call this shady and I do get why. The circular nature of these agreements is unorthodox and has spelled trouble in the past.

At the same time, this is the luxury of NVDA's balance sheet being so great. They can afford to finance a lot to accelerate adoption. Uber is doing something similar in terms of taking stakes in firms like Lucid to gain access to their AV fleets -- pushing that industry forward. That’s what Nvidia is doing here. They’re turning a willingness to fund and expedite a lot of the AI infrastructure rollout into direct equity stakes in arguably the most compelling AI company out there right now. Uber has also talked about eventually using 3rd parties to offload this balance sheet investment and Nvidia could eventually do the same thing if they need more cash (which they currently do not).

Nvidia surely has done the math and this is them telling us the large investment should be good for overall customer lifetime value and unit economics, while they also get a sizable stake in arguably the most important pure-play AI firm out there.

I expect more deals like this to be announced for as long as the massive commitments that unprofitable firms like OpenAI are making continue to be embraced. That desire still currently looks strong based on this massive investment and the billions we’ve seen tech providers invest in this company, Anthropic, Scale AI and others. As soon as that funding appetite begins to wane, deals like OpenAI’s $300B commitment to Oracle will get more difficult to execute. And incrementally gigantic deals like these will probably get less common. Until then… I think the bulls will likely party on. And when will that party end? No clue. Timing that peak is all but impossible and not the game I want to try playing.

3. Meta (META) – Fun Milestone & Ridiculous Growth & Alphabet

Meta’s Instagram crossed 3B users. Since crossing 2B in December 2021, the already gigantic user base has compounded at an 11% annualized clip. Let’s compare that to Pinterest. Pinterest had 431M monthly actives in Q4 2021. The base was a little more than 20% of Instagram’s size. Since then? Their users have compounded at a 9% clip. Pinterest isn’t a bad company. Meta is just that good.
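The annualized growth figure is a standard compound annual growth rate (CAGR). A quick Python sketch of the math follows. The elapsed time is my assumption: I use roughly 3.75 years, on the premise that the 3B milestone landed around September 2025, which is not stated above. The function name is hypothetical.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1


# Instagram: 2B -> 3B users over an ASSUMED ~3.75 years.
instagram = cagr(2.0, 3.0, 3.75)
print(f"Instagram user CAGR: {instagram:.1%}")  # about 11%

# Pinterest's Q4 2021 base relative to Instagram's 2B at the time.
base_ratio = 431 / 2000
print(f"Pinterest base vs. Instagram: {base_ratio:.1%}")  # about 21.5%
```

The point of the comparison is that a base nearly five times larger is still compounding faster, which is unusual since growth rates tend to fade as the base gets bigger.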

In other news, Meta is reportedly considering using Gemini models to enhance ad targeting precision. In a perfect world, Meta would have far and away the best models and it wouldn’t be compelled to do things like this. But the world isn’t perfect. Meta’s models are very good and especially purpose-built for the company’s own needs. At the same time, Gemini boasts the best general-purpose models on the planet. Combining the models most relevant to your own business with those of the model leader should have materially positive impacts on impression pricing and engagement. And obviously for Alphabet, this is merely another sign of their impressive lead in the model-building race.

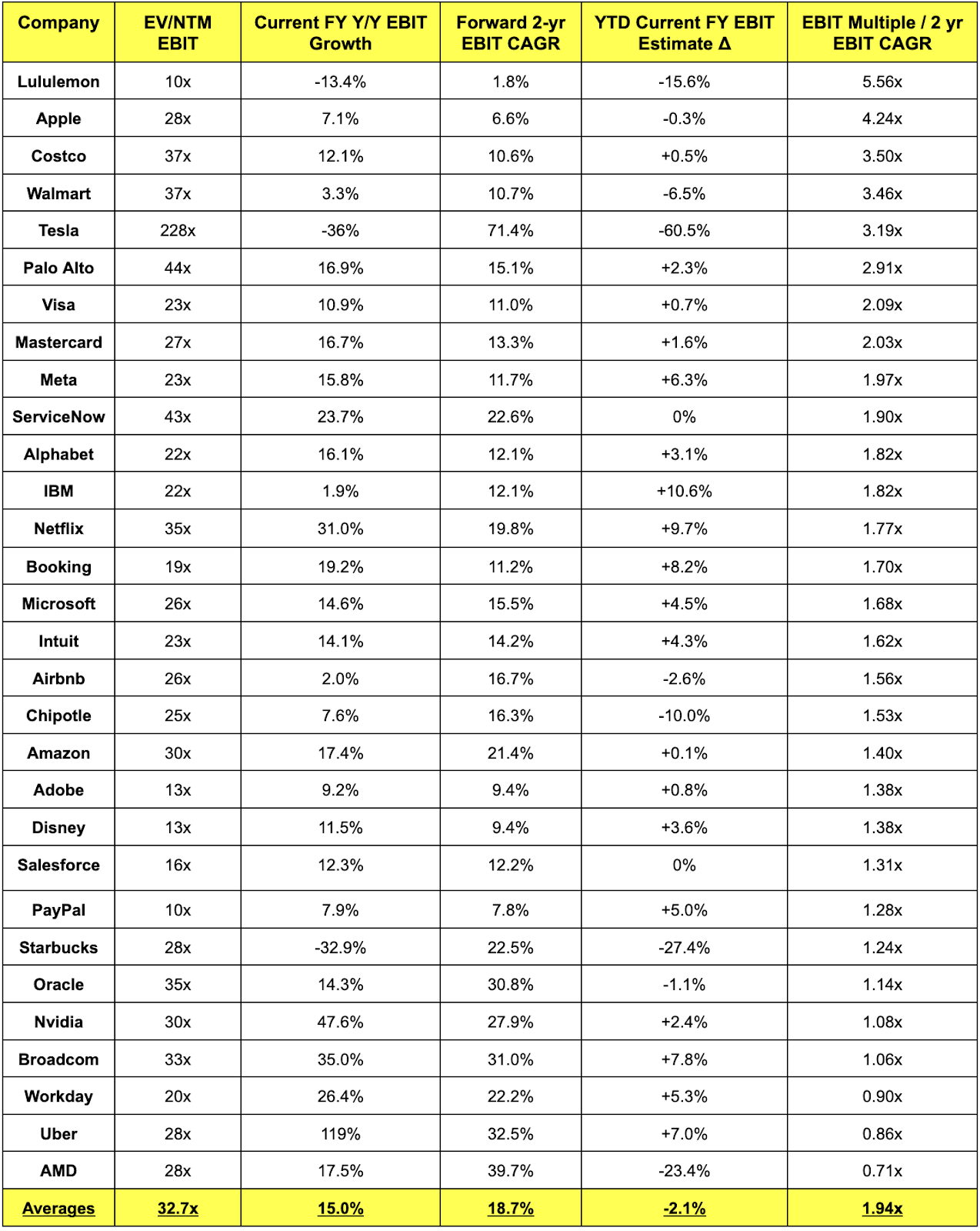

4. Income Statement Growth Multiple Comp Sheets

a. Mature Growth EBIT Comp Sheet

Notes:

- Most of the firms listed in the chart below do not make non-GAAP EBIT adjustments. A few of them do. For these firms, non-GAAP add-backs like stock compensation are very modest. These adjustments did not have a material impact on the data below, so I did not tweak anything.

- Multiples use consensus next 12-month profit expectations.

- 2-year EBIT CAGR uses next fiscal year and the following fiscal year for each company.

- The rightmost column is a variation of Peter Lynch’s PEG ratio framework. I prefer using multiple years of profit growth.
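To make the growth-adjusted column concrete, here is a rough Python sketch of one way to compute a PEG-style ratio with two years of growth. The exact formula behind the column isn't spelled out in these notes, so this assumes the multiple is divided by the 2-year CAGR expressed in percentage points. The function names and the sample inputs (20x EBIT, 25% and 15% growth) are hypothetical and not taken from the table.

```python
import math


def two_year_cagr(growth_year_1: float, growth_year_2: float) -> float:
    """Geometric average of two consecutive annual growth rates."""
    return math.sqrt((1 + growth_year_1) * (1 + growth_year_2)) - 1


def growth_adjusted_multiple(multiple: float, growth_rate: float) -> float:
    """PEG-style ratio: multiple divided by growth in percentage points."""
    return multiple / (growth_rate * 100)


# Hypothetical company: 20x forward EBIT, growing 25% then 15%.
growth = two_year_cagr(0.25, 0.15)
ratio = growth_adjusted_multiple(20.0, growth)

print(f"2-year CAGR: {growth:.1%}")         # about 19.9%
print(f"Growth-adjusted multiple: {ratio:.2f}")  # about 1.01
```

Using a geometric average instead of a single year smooths out one-off spikes or dips, which is the appeal of looking at multiple years of profit growth.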