I’ve removed the paywalls for the Zscaler & Nvidia earnings reviews. A lot of our content has shifted to paid, and I like to occasionally do this as a thank you to free subscribers for your continued interest. I know the paywalls are annoying, but they’re unfortunately necessary to provide my livable salary so that I can do this full time. Thanks for your understanding and enjoy the following reviews! There are 38 more from this earnings season available to read on the website.

1. Zscaler (ZS) — Earnings Review

a. Zscaler 101

Zscaler is a large player in network security. It competes with Palo Alto’s next-gen suite, Cloudflare and many others. Zscaler’s Zero Trust Exchange (ZTE) is its overarching, cloud-native security platform. It blazes a trail between users, apps and devices across eligible networks, while securing data at rest and in motion. Zero Trust is exactly what it sounds like: never trusting a device or end user. The exchange vets and verifies all traffic as it moves within a company’s perimeter. It doesn’t allow bad actors to breach infrastructure weak spots and gain free access to everything else thereafter. That’s called “lateral threat movement.” It never trusts (as the name indicates) and constantly verifies. Zscaler uses risk scores to assess needed levels of security for requests. That makes sure it’s only creating user friction when there’s an actual security concern. This Zero Trust approach routinely cuts infrastructure costs for customers by shrinking the attack surface and granting more granular permissions.

ZTE replaces an antiquated firewall and virtual private network (VPN) setup in which fixed rules determine entry into the firewall-protected environment. Once that entry is granted, every device & user within a perimeter gets perpetual and unconditional access. I think it’s clear to see how that could be more problematic than ZTE’s approach.
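
To make the contrast concrete, here is a toy Python sketch of the two access models described above. It is not Zscaler’s implementation: the thresholds, the `Request` fields and the entitlement map are all hypothetical, chosen only to show why per-request verification limits lateral movement.

```python
from dataclasses import dataclass

# Hypothetical thresholds on a 0.0-1.0 risk scale, for illustration only.
STEP_UP_THRESHOLD = 0.4
DENY_THRESHOLD = 0.8


@dataclass
class Request:
    user: str
    resource: str
    risk_score: float  # 0.0 (benign) to 1.0 (highly suspicious)


def perimeter_decision(inside_perimeter: bool, request: Request) -> str:
    """Legacy firewall/VPN model: once inside, everything is allowed."""
    return "allow" if inside_perimeter else "deny"


def zero_trust_decision(request: Request, entitlements: dict[str, set[str]]) -> str:
    """Zero trust model: every request is checked, wherever it comes from."""
    # Least privilege: the user must be explicitly entitled to this resource.
    if request.resource not in entitlements.get(request.user, set()):
        return "deny"
    # Risk-based friction: only challenge the user when risk is elevated.
    if request.risk_score >= DENY_THRESHOLD:
        return "deny"
    if request.risk_score >= STEP_UP_THRESHOLD:
        return "step_up_auth"
    return "allow"


# A compromised session that is already "inside" and probes another system.
entitlements = {"alice": {"payroll-app"}}
stolen_session = Request(user="alice", resource="source-code-repo", risk_score=0.2)

print(perimeter_decision(True, stolen_session))           # allow
print(zero_trust_decision(stolen_session, entitlements))  # deny
```

The perimeter model waves the request through because the session is already inside. The zero trust check denies it because the user was never entitled to that resource, regardless of where the request originated.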

Zscaler Network Security Products:

- Zscaler Internet Access (ZIA) protects internet connections. It’s the middleman between a user and a network that ensures proper authorization & access.

- ZIA routinely displaces legacy secure web gateways (SWGs) and firewalls.

- Zscaler Private Access (ZPA) offers remote access to internal apps. This upgrades VPN utility by “connecting directly to required resources without public exposure,” per Zscaler filings.

- Zscaler Digital Experience (ZDX) ensures high quality and always-on performance of cloud apps. It sifts through networks to identify sources holding back productivity to be fixed.

- This includes application performance monitoring, some endpoint monitoring tools and more.

- Zscaler for Users is the firm’s bundle that combines ZIA, ZPA and ZDX.

For newer network products, Zero Trust Segmentation localizes and separates networks. This treats individual stores/factories/buildings as secure islands to prevent open sharing across locations. Branch security treats every single company location as its own island or network to shrink the attack surface. These “islands” are connected only when needed. Both branch security and segmentation work very closely together to lower the risk of lateral threat movement.

Key Zscaler Network Performance Products:

- Virtual Desktop Infrastructure (VDI): Allows software to be accessed on remote devices. ZTE ensures this is done safely and securely.

- Software-Defined Wide Area Networks (SD-WAN): Digital manager of network connectivity. It splits network hardware and software-based control. This cuts hardware and network costs, streamlines management & augments protection. This replaces Multiprotocol Label Switching (MPLS).

- ZS’s SD-WAN offering is zero trust-based.

- Software-based management paired with Zscaler’s Zero Trust approach allows for seamless connection to remote branches, contractors and data centers.

- Virtual Private Cloud (VPC): These are subsections of public cloud environments. They offer users more autonomy with their network and apps. They also allow for secure connections between cloud and self-hosted (on-premise) environments with no public network exposure. This is especially key for highly regulated industries.

Network Security + Network Performance:

Secure Access Service Edge (SASE) provides an overarching, bundled suite of network security and performance tools. Secure Service Edge (SSE) is the security component of SASE.

Security Operations (SecOps) & IT Operations (ITOps) Products:

- Unified Vulnerability Management (UVM). This offers a birds-eye view to tag, assess and remediate vulnerabilities across all cloud environments and assets. It ranks all issues, prioritizes pressing items and offers the best course of action for remediation.

- Part of SecOps

- Risk360 flags vulnerabilities and offers end-to-end risk quantification with intuitive next steps for remediation.

- Part of SecOps

- Business Insights: Broad visibility into app usage, costs, needs and engagement. This helps minimize unneeded apps and licenses.

- Part of SecOps

- ZDX Copilot is its GenAI assistant designed to detect and resolve network performance issues on its own.

- Part of ITOps.

Pure Cloud Security Offerings (could call most of its product suite “cloud security”):

Zscaler also offers configuration analysis and cloud workload protection products.

Key Zscaler Data Products:

- Data security posture management (DSPM) granularly tags, organizes and protects cloud-native data.

- Data Loss Prevention (DLP) guards clients against data leakage or theft. This works for email, cloud, web, endpoints and more.

- Data Fabric for security is how Zscaler collects and combines needed context from 1st and 3rd party sources. This improves overarching security visibility to ensure proper hygiene, strong risk management and timely remediation.

- Its unified suite of data products is called Zscaler Data Everywhere.

Zscaler offers data security across endpoints, email, web, GenAI apps, legacy software and so much more. This is an important newer company priority. If it’s already protecting so much of the world’s network traffic… and if it already collects and leverages all of this data (structured and unstructured) in its massive, scalable security data lake… that gives it a head start on using this lucrative insight to offer more products.

Tying it all together:

Zero Trust Everywhere is the latest and greatest iteration and branding of its Zero Trust Exchange. It includes all products within Zscaler for Users, all cloud security offerings and the Zero Trust Branch product. It features a communicative focus on holistic, complete coverage. Like Palo Alto with “Platformization,” as well as CrowdStrike and most cybersecurity firms in various parts of the sector, Zscaler is reorganizing its offering to drive easier platform-level adoption. In turn, that means better data sharing, better outcomes, lower costs and loyal customers more reliant on the bundle.

b. A Quick Reminder & Key Points

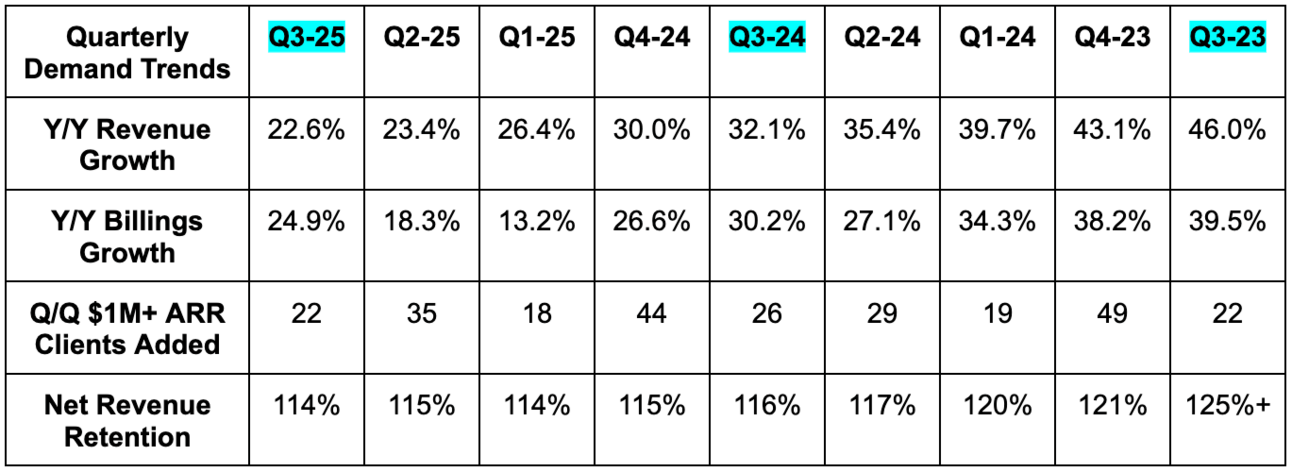

As discussed for the last 9 months, Zscaler signs 3-year contracts with customers. Macro headwinds during this past cycle were strongest over the first half of 2022 and the first half of 2023. Meaning? That weakness was reflected in its scheduled, contracted billings growth during Q1 and Q2. Macro headwinds abated during the second halves of both 2022 and 2023, which is why it guided to 7% billings growth during the first half of this year and 23% growth during the second half. As you’ll see below, it executed. And? That shouldn’t be a surprise. It’s always uncomfortable to bank on future accelerations to meet guidance. In this case, however, its forecast was not related to optimism or aggression, but observed data. Zscaler’s billings contracts are non-cancelable; it rightfully based its second-half guidance on already signed business and pipeline conversion strength.

c. Demand

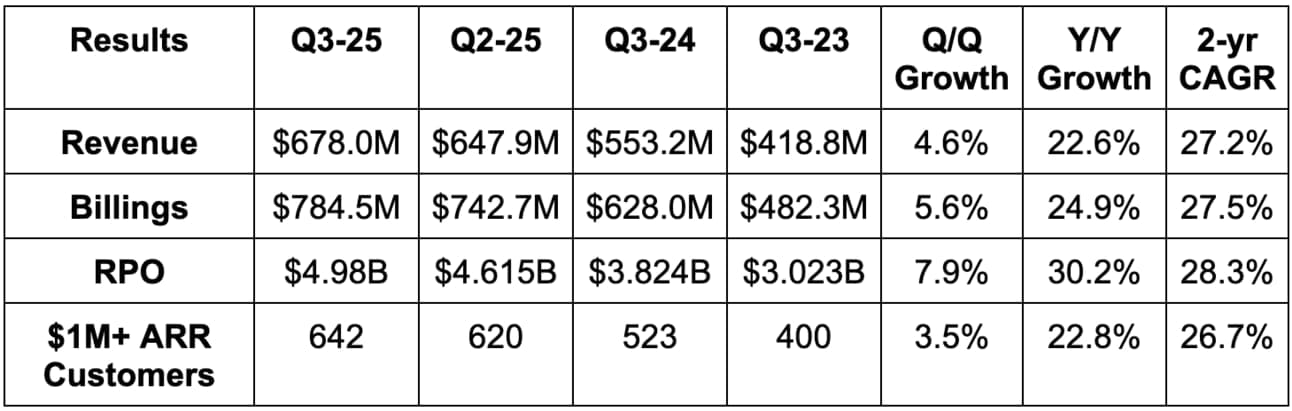

- Beat revenue estimate by 1.7% & beat guidance by 1.8%.

- Beat billings estimate by 3.1%.

- Beat $1M+ ARR customer estimate by 0.3% (642 vs. 640 expected).

- Missed $100,000+ ARR customer estimate by about 1.4% (3,363 vs. 3,410 expected).

- ARR rose 23% Y/Y to roughly $2.9B.

- Beat remaining performance obligation (RPO) estimate by 4.1%.

- Net revenue retention (NRR) missed 115% estimates by a point. This was related to landing larger initial deals with customers. Great reason for a miss.
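
The beat and miss percentages in the bullets above all follow the same arithmetic. As a minimal sketch, using only the two customer counts quoted in the list (the function name is my own):

```python
def surprise_pct(actual: float, estimate: float) -> float:
    """Percentage by which a reported figure beat (+) or missed (-) the estimate."""
    return (actual - estimate) / estimate * 100


# Customer counts quoted in the bullets above.
big_customers = surprise_pct(642, 640)
mid_customers = surprise_pct(3_363, 3_410)

print(f"$1M+ ARR customers: {big_customers:+.1f}%")
print(f"$100K+ ARR customers: {mid_customers:+.1f}%")
```

A positive result is a beat and a negative result is a miss, so the sign carries the direction without a separate flag.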

Demand performance was rightfully praised by analysts on the call. Sales productivity continued to rise, as Zscaler’s selling changes have worked more expediently than for others like SentinelOne. Bookings crossed $1B for Q3 for the very first time and new client momentum resulted in phenomenal 40% Y/Y annual contract value (ACV) growth. And as leadership told us to expect all year, scheduled billings growth recovered to the low-20% range. Additionally, unscheduled billings growth outperformed, coming in at 28% Y/Y.

d. Profits & Margins

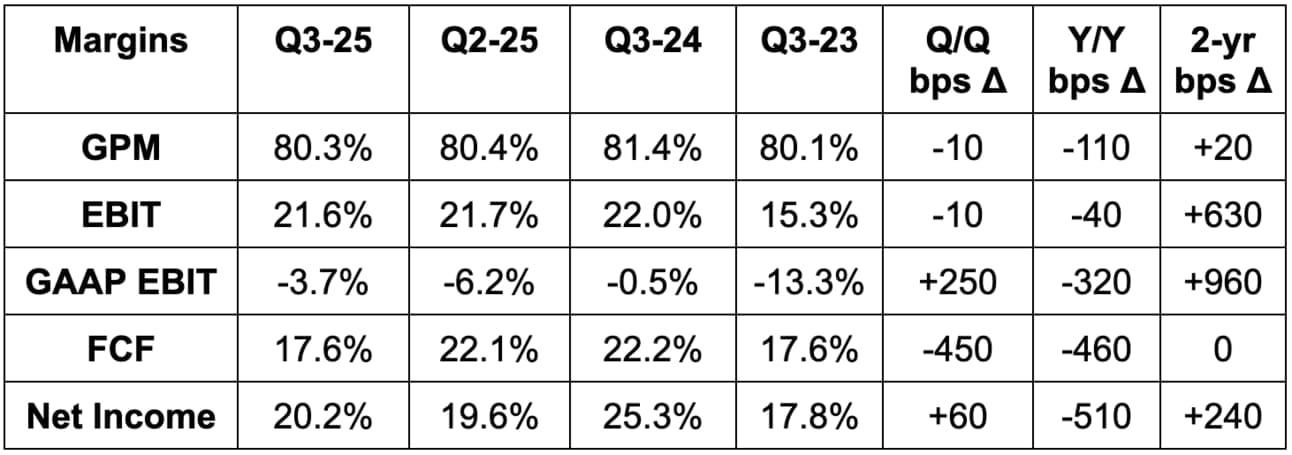

- Met GPM estimate.

- Beat EBIT estimate by 3.7% & beat guidance by 4.0%.

- Beat EPS estimate by $0.08 & beat guidance by $0.085.

- Beat -$0.14 GAAP EPS estimate by $0.11.

- Beat FCF estimate by 3.5%.

Zscaler’s team has been clearly communicating their present focus on pace of product introduction. They are not prioritizing margin optimization for all of these new offerings, and are confident they can do that work over time.

e. Balance Sheet

- $3B in cash & equivalents.

- 0.5% Y/Y share dilution.

- No traditional debt.

- $1.15B in convertible senior notes.

f. Guidance & Valuation

- Raised annual billings guidance by 0.8%. The amount of the annual raise was $2.3M larger than the Q3 beat, implying improved Q4 expectations.

- Slightly raised Q4 revenue guidance by 0.1%, which slightly missed estimates by 0.1%. Rounding errors.

- Raised Q4 EBIT guidance by 1.1%, which slightly beat estimates by 0.3%.

- Raised $0.76 Q4 EPS guidance by $0.025, which beat estimates by $0.015.

- Reiterated at least $3B in ARR for the year.
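
The logic behind the billings bullet above is simple subtraction: whatever part of an annual raise is not explained by the quarter’s beat must come from higher expectations for the remaining quarter. A small sketch follows. The absolute dollar amounts are hypothetical placeholders, and only the $2.3M gap comes from the text.

```python
def implied_q4_change(annual_raise_m: float, q3_beat_m: float) -> float:
    """Portion of an annual guidance raise not already explained by the Q3 beat ($M)."""
    return annual_raise_m - q3_beat_m


# Placeholder inputs (hypothetical); only their $2.3M difference comes from the text.
q3_beat_m = 23.0
annual_raise_m = q3_beat_m + 2.3

change = implied_q4_change(annual_raise_m, q3_beat_m)
print(f"Implied change to Q4 billings expectations: {change:+.1f}M")
```

A result of zero would mean the company simply passed the beat through, while a negative result would imply a quietly lowered Q4.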

g. Call & Release

M&A, an Expanding Platform & the Security Operations Center (SOC):

Zscaler is buying Red Canary for $675M in cash and some equity compensation. Estimates for the overall deal value range from $800M to $1B. Red Canary is a well-established managed detection and response (MDR) vendor. This means it handles vulnerability and breach detection, as well as helping with remediation. The company deploys a blend of security analysts and AI to accomplish this as a needed way for clients with finite security budgets to be covered without exploding costs. With the deal, Zscaler also gets an injection of threat intelligence talent and what it calls “sophisticated agentic AI tech for reasoning and workflows.” Red Canary is an MDR leader per Forrester and has lofty Gartner peer review scores.

Perhaps most importantly, MDR gives Zscaler a vital missing ingredient it thinks it needs to be a client’s Security Operations Center (SOC). A SOC is essentially an aggregated hub of security coverage for all needed assets in one place. It relies heavily on 3 things. The first two are great security software, which ZTE provides, and scalable, organized, non-siloed access to information, which its data lake provides.

IT operations (ZDX Copilot) paired with SecOps (Risk360, Business Insights and UVM) is the third ingredient. Zscaler already had some offerings here, but it was missing MDR. Red Canary’s MDR product is the final piece of the SecOps puzzle, as it removes the talent bottleneck that countless Zscaler clients face when trying to fully cover their enterprises. With Red Canary, ZS will “accelerate time to having a comprehensive SOC solution by 12-18 months.” And fortunately (not coincidentally), Red Canary also has a veteran go-to-market team that “knows SOC buyer personas and can act as a specialist team to partner closely with the larger go-to-market engine.” In my opinion, this is an excellent purchase. Winning that SOC status with customers means heavier reliance on Zscaler, more product uptake, higher retention rates and faster growth. It’s important.

And while Zscaler still needs to integrate Red Canary to have the holistic SOC offering it desires, SecOps momentum is already strong. Annual contract value (ACV) for the bucket rose by more than 120% Y/Y.

“By combining Zscaler’s high-volume and high-quality data with Red Canary's domain expertise in MDR, Zscaler will accelerate its vision to deliver AI-powered security operations.”

Presentation

More on Platform-Wide Adoption:

Zero Trust Everywhere customers rose 60% Q/Q to 210, as momentum for this product messaging is quite strong early on. This continues to deliver a 200% return on investment (ROI) for a subset of customers and displace the need for several legacy network tools. Simply put, ZTE is a powerful vendor consolidator, a capable cross-selling tool, an impactful retention tool, and, more generally speaking, an instrumental piece of platform-wide product adoption.

For signs of new product momentum, its 3 emerging growth categories crossed $1B in annual recurring revenue (about 35% of total) and comfortably outpaced overall company growth. As a reminder, these three categories are:

- Zero Trust Everywhere.

- Data Security Everywhere (unified suite of its data products)

- Agentic Operations, which include all SecOps and ITOps products.
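
Two figures in this section can be sanity-checked with quick arithmetic: the prior-quarter Zero Trust Everywhere customer count implied by 60% Q/Q growth to 210, and the share of total ARR represented by the $1B emerging categories. The sketch below treats the “roughly $2.9B” total ARR from the demand section and the $1B crossing point as round inputs; the helper names are my own.

```python
def prior_period_value(current: float, growth_pct: float) -> float:
    """Back out the starting value from an ending value and a growth rate."""
    return current / (1 + growth_pct / 100)


def share_pct(part: float, total: float) -> float:
    """Part as a percentage of the total."""
    return part / total * 100


# 210 Zero Trust Everywhere customers after 60% Q/Q growth.
prior_customers = prior_period_value(210, 60)

# Roughly $1B of emerging-category ARR against roughly $2.9B of total ARR.
emerging_share = share_pct(1.0, 2.9)

print(f"Implied prior-quarter customers: about {prior_customers:.0f}")
print(f"Emerging categories as share of ARR: about {emerging_share:.0f}%")
```

With those rounded inputs, the share lands between 34% and 35%, in line with the “about 35%” cited above.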

Within Zero Trust Everywhere, Zero Trust Branch was purchased by 59% of new customers, showing how quickly it can turn launches into revenue. Most of these logos are starting small and offer large upsell opportunities.

“Frankly, the degree of interest the customers have taken in this area has exceeded my expectations.”

Founder/CEO Jay Chaudhry

For security and IT operations, UVM + Data Fabric management won them a 7-figure deal for 400,000 client assets. ZDX Copilot has also fostered 70% growth in ZDX Advanced Plus bookings since its launch 13 months ago.

Pertaining to data, its Data Fabric suite was a key contributor for a Fortune 100 new logo and a Fortune 50 upsell, which raised annual spend for a large automaker by 50% Y/Y (well into 8 figures). They now use 6 of ZS’s 8 data modules. Its GenAI data security product netted a Global 2000 company, a leading fleet management firm and a federal customer. To keep momentum revving, it is expanding its GenAI public app product to private apps and adding malicious prompt injection (intentionally giving models bad info) coverage.

Platforms Win the Big Boys:

Evidence of being a true platform can be seen in large client momentum. Aside from the $1M+ ARR client beat, 45% of Fortune 500 members are ZS customers vs. about 40% Y/Y. T-Mobile was a key win during the quarter, as it’s modernizing its entire ecosystem with the help of ZTE. Other notable wins this quarter included a 7-figure big bank deal. The client displaced several legacy vendors with Zero Trust for Users and cloud workload protection after initially trusting Zscaler to secure its apps. I guess they were pleased.

“While legacy vendors are attempting to cobble together disjointed point products and calling it a platform, we are constantly expanding our core Zero Trust Exchange by integrating new functionality to solve more and more of our customers' security concerns.”

Founder/CEO Jay Chaudhry

Why a Scaled Platform Creates Competitive Differentiation:

We talk about this all the time. Apps and models are only as good as the data they’re trained on. Zscaler sees 500B transactions and processes 20 petabytes of data per day. Great products got them here… and now this traction spins a compelling flywheel. Leading network and cloud security scale means leading access to data to keep driving more product improvements and new introductions. That process naturally attracts more customers, while a larger product suite means bigger, stickier contracts. In turn, this means even more data to keep the engine humming.

Tweaked Go-To-Market to Unleash More Platform-Wide Adoption:

Zscaler is tweaking go-to-market in the exact same way CrowdStrike did. They’re not even hiding it either, as they’re calling the new selling motion “Z-Flex.” CrowdStrike’s design is called Falcon Flex. And while I find this funny, I also think it’s a great decision. CrowdStrike has proven that Falcon Flex can be a powerful enabler of giant deals. It’s the right decision to emulate this approach.

Z-Flex enables flexible usage of contractual commitments, with an ability to front or back-load usage if needs change. It’s easy to swap which modules a client is using, making it simple for them to experiment and learn about the other utility Zscaler’s platform provides. This can now happen without a formal procurement process every time a customer wants to change something. Z-Flex offers the same volume-based discounts that Falcon Flex does, as well as full-service deployment and support. It has already netted a $6M upsell to a $13M per year client, which expanded usage of 12 modules and added 8 more modules. This was one of two Fortune 500 wins for Z-Flex during the quarter, which, all in all, already has $65M in bookings just a few weeks into launching.

This is one of the reasons why billings disclosures will be replaced with annual recurring revenue (ARR) disclosures starting next year. Flex is changing billings patterns and making that a less reliable indicator for growth, as quarterly usage is less tied to annualized demand.

“So the Z-Flex program came out of our customers' desire to buy more, but not having to go through negotiations and procurement every time.”

Founder/CEO Jay Chaudhry

AWS:

Zscaler was added to AWS’s Marketplace for the U.S. Intelligence Community (ICMP). This unlocks access to significantly more public sector opportunities that funnel through AWS’s ecosystem.

Leadership:

Zscaler named Kevin Rubin as their new CFO. He’s a veteran executive who has been a CFO since 2005. He led companies to successful exits and, most notably, was Alteryx’s CFO for 8 years.

“I firmly believe his recent eight-year tenure as a CFO of a data analytics company will be crucial to Zscaler in our next phase of growth, which will be driven in large part by the combination of Zero Trust and AI security.”

Founder/CEO Jay Chaudhry

Zscaler also added Raj Judge to its board and named him as its new EVP of Corporate Strategy and Ventures. He was at Wilson Sonsini Goodrich & Rosati, a world-renowned law firm, for over 30 years.

Macro:

Zscaler did not see any demand softening in April like SentinelOne cited last night. Although they’re not direct competitors in most places, they do experience the same structural tailwinds. Zscaler’s relative resilience is a byproduct of excellent software that lowers total cost of ownership and thriving go-to-market. Both of those things are needed.

Zscaler has been quite proactive in focusing on cost reduction messaging for customers considering a migration. Cost reductions are always popular… and in times of uncertainty, they’re especially popular. Customer deal scrutiny didn’t improve (or worsen) quarter over quarter; Zscaler simply overcame stable headwinds better than others could.

h. Take

Very good quarter. Everything here looks good and Zscaler is not seeing the same ramping macro headwinds SentinelOne cited last night. Simply put, I think that has a lot to do with better execution. Zscaler is driving impactful product innovation and effectively selling it. It’s greatly expanding its overall opportunity through ZTE extensions, cloud security, data security and its push to be a client’s core SOC. I don’t really worry about this holding often. It’s a wonderfully boring compounder in a wildly compelling space where it just keeps taking more market share. The multiple is getting a bit stretched, but I’m not quite ready to trim and take any profits at this stage. I’ll keep you posted in real-time, as always.

2. Nvidia (NVDA) – Earnings Review

a. Nvidia 101

Nvidia designs semiconductors for data center, gaming and other use cases. It’s unanimously considered the technology leader in chips meant for accelerated compute and Generative AI (GenAI) use cases. While it specializes in chips, it does a lot more than that too. Its toolkit includes chips, servers, switches, networking and cutting edge software. It designs the entire next-gen data center layout with slick software integrations so customers can enjoy the best of accelerated compute. Nvidia calls these data centers “AI factories.”

The following items are important acronyms and definitions to know for this company:

Chips:

- GPU: Graphics Processing Unit. This is an electronic circuit used to process visual information and data.

- CPU: Central Processing Unit. This is a different type of electronic circuit that carries out tasks/assignments and data processing from applications. Teachers will often call this the “computer’s brain.”

- Hopper: Nvidia’s modern GPU architecture designed for accelerated compute and GenAI. Key piece of the DGX platform. Blackwell is the next platform after Hopper. Rubin will come after Blackwell.

- H100: Its Hopper 100 Chip. (H200 is Hopper 200).

- Ampere: The GPU architecture that Hopper replaces for a 16x performance boost.

- L40S: Another, more barebones GPU chipset based on Ada Lovelace architecture. This works best for less complex needs.

- Grace: Nvidia’s new CPU architecture that is designed for accelerated compute and GenAI. Key piece of the DGX platform.

- GH200: Its Grace Hopper 200 Superchip with Nvidia GPUs and ARM Holdings tech.

- Intuitively, GB200 means Grace Blackwell 200.

Connectivity:

- NVLink Switches: Designed to connect Nvidia GPUs within one server. GPU connections power great efficiency, performance and computing scale (so cost advantages).

- The newest Blackwell system allows for 144 total GPUs to be connected (several factors higher than Hopper).

- InfiniBand: Interconnectivity tech providing an ultra-low latency computing network. This can connect larger batches of accelerated compute clusters for more scalability.

- Spectrum X: Newer networking switches for large-scale, Ethernet-only AI.

- This can connect 100,000 Hopper GPUs, like xAI did with its Colossus Supercomputer. Nvidia wants to soon push that to the millions.

Software, Models & More:

- NeMo: Guided step-functions to build granular GenAI models for client-specific needs. It’s a standardized environment for model creation.

- CUDA: Nvidia-designed computing and program-writing platform purpose-built for Nvidia GPUs. CUDA helps power things like Nvidia Inference Microservices (NIM), which guide the deployment of GenAI models (after NeMo helps build them).

- NIMs help “run CUDA everywhere” — in both on-premise and hosted cloud environments.

- GenAI Model Training: One of two key layers to model development. This seasons a model by feeding it specific data.

- GenAI Model Inference: The second key layer to model development. This pushes trained models to create new insights and uncover new, related patterns. It connects data dots that we didn’t realize were related. Training comes first. Inference comes second… third… fourth etc.

DGX: Nvidia’s full-stack platform combining its chipsets and software services.

b. Important Reminder & Key Points

As a reminder, last month, the federal government imposed license-based restrictions on Nvidia’s H20 GPUs. These were the lower-powered chips specifically designed to meet heightened export limitations imposed last year. Per Jensen, the new rules effectively shutter the Chinese market for Nvidia’s high-end chips; it does not see an ability to build compliant Hopper chips that would be competitive in that market. As a result, Nvidia incurred a $4.5B charge in Q1, as it had excess inventory that no longer was tied to expected demand. This was supposed to be $5.5B, but the firm was able to repurpose some raw materials.

It did not ship $2.5B worth of H20 chips during the quarter. Next quarter will feature a full 90-day impact of these H20 restrictions, which is why the expected revenue headwind will rise from $2.5B this quarter to $8.0B next quarter. There will likely be charges in Q3 as well. As we work through the financial parts of this piece, I will offer context adjusting for these temporary expenses.

Huang walked a fine line between praising the federal government and criticizing it. On the positive side of things, he complimented removing the AI diffusion rule, which opened several Middle Eastern markets for business. At the same time, he harshly criticized this specific Chinese restriction, as he thinks Chinese AI running on American infrastructure will help the USA lead this tech revolution. It would mean more developers and researchers optimizing for the U.S.-based models and would tighten this nation’s strong grip on the AI boom.

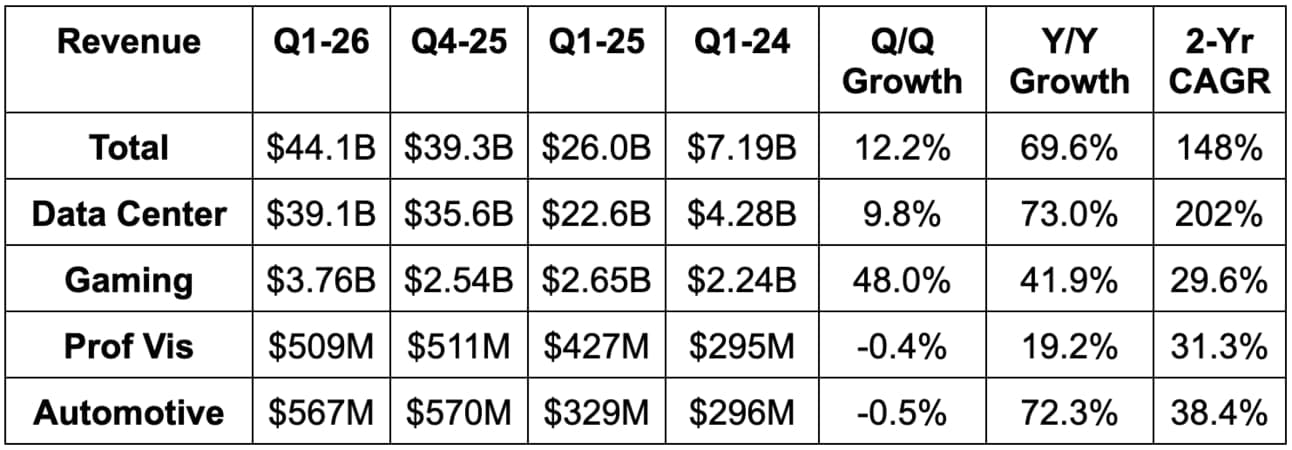

- Elite growth with elite margins at elite scale.

- The China headwind was a bit smaller than expected.

- It thinks the runway for AI chip demand remains massive.

- Sovereign AI is emerging as a large growth vector.

c. Demand

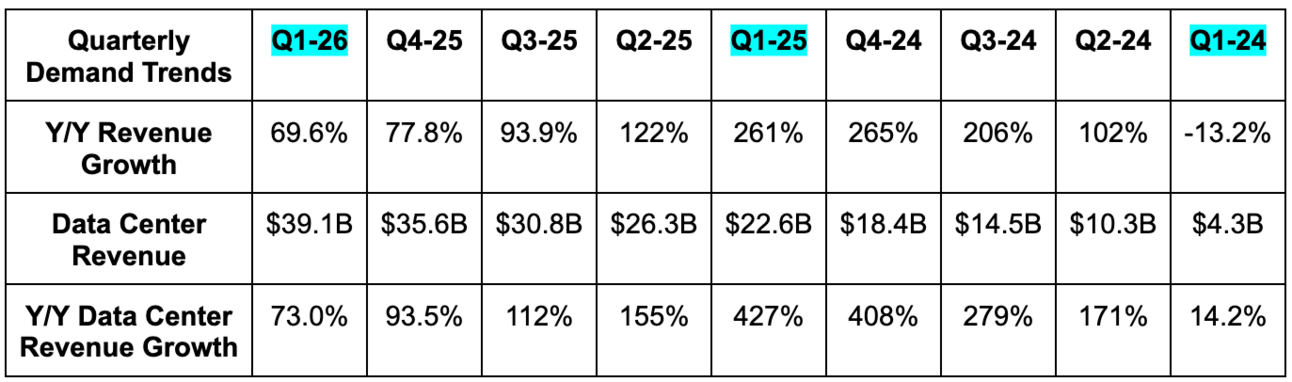

- Beat revenue estimate by 1.8% & beat guide by 2.6%.

- Slightly missed data center estimate by 0.3%.

- Missed $34.2B compute revenue estimate by 3.7%.

- Beat $3.5B networking revenue estimate by 42%.

- Beat gaming revenue estimate by 32%.

- Beat professional visualization (prof. vis.) revenue estimate by 0.8%.

- Missed auto revenue estimate by 2%.

d. Profits & Margins

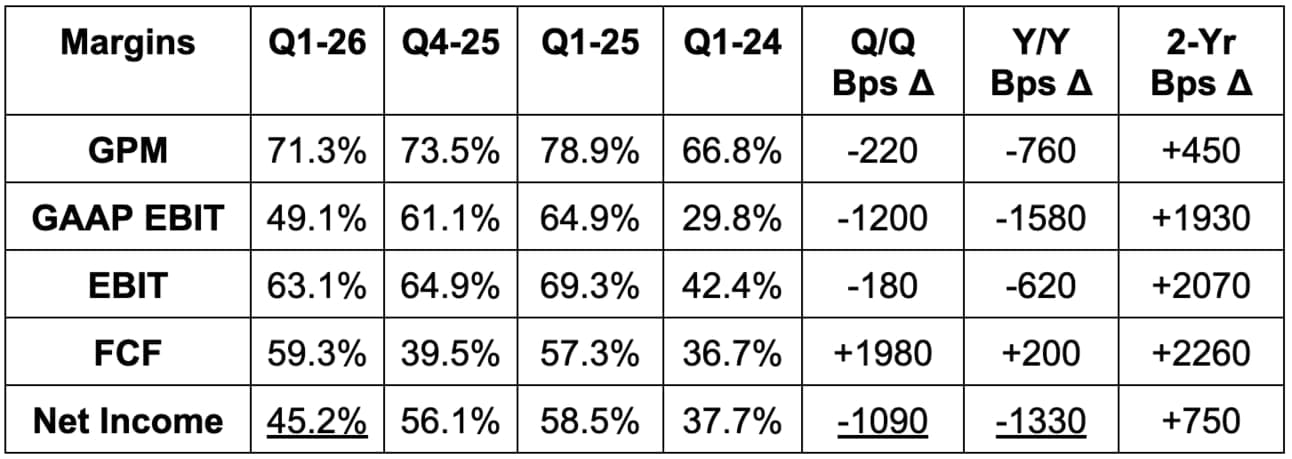

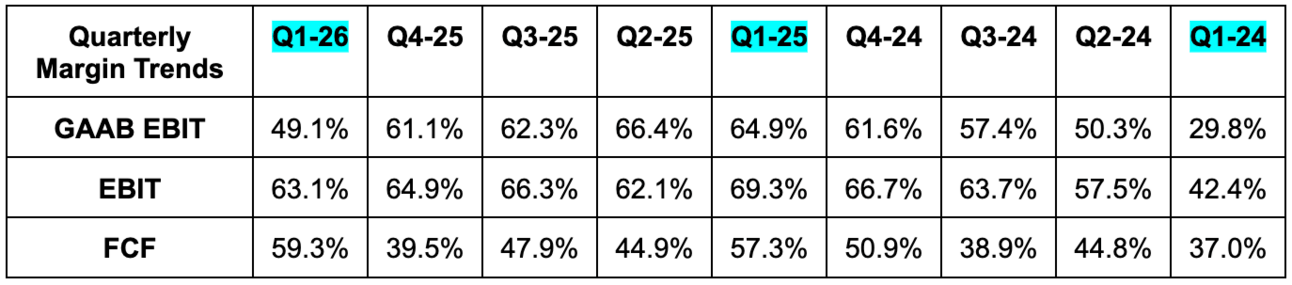

- Missed 67% GPM estimate by 6 points. Excluding the impact of the mid-quarter H20 export restriction, GPM was 71.3% and beat 70% ex-charge GPM estimate.

- The chart below adjusts for the $4.5B charge.

- Beat EBIT estimate by 8% and beat EBIT guidance (excluding the $4.5B charge) by 3.6%.

- The chart below adjusts for the $4.5B charge in the non-GAAP EBIT column.

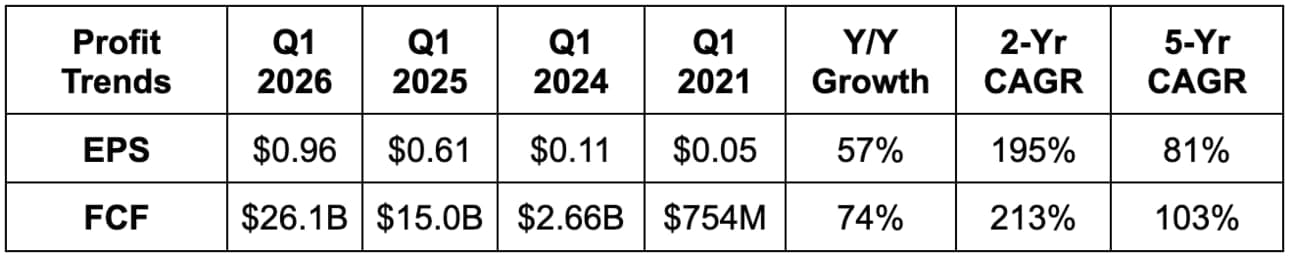

- Beat $0.75 EPS estimate by $0.06. Excluding H20 charges, it earned $0.96 per share, which beat the $0.93 ex-charge estimate.

- I left the net income column as is to offer a sense of how large of a margin headwind China was during the quarter.

- Beat FCF estimate by 29%.

e. Balance Sheet

- $53.7B in cash & equivalents.

- $11.3B in inventory vs. $10B Q/Q.

- $8.4B in long-term debt.

- Diluted share count fell 1% Y/Y.

f. Guidance & Valuation

Missed revenue estimate by 1.5%. This includes an $8B H20 headwind, which is $3B larger than expected. Had the headwind been as expected, revenue guidance would have been a little more than 5% ahead of consensus. It beat 71.7% GPM estimates by 30 bps and missed EBIT estimates by 1% – again related to the larger-than-expected China headwind. It continues to see a clear path to a mid-70% GPM this year.
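
The “a little more than 5% ahead” claim above can be reproduced from the percentages in the paragraph plus one absolute number. In this sketch, the $45B guidance midpoint is my assumption and is not stated in the text; the 1.5% miss and the $3B of extra headwind are from the paragraph above.

```python
def pct_vs_estimate(value: float, estimate: float) -> float:
    """Percentage by which a value sits above (+) or below (-) an estimate."""
    return (value / estimate - 1) * 100


guidance_b = 45.0        # Assumption: revenue guidance midpoint in $B (not in the text).
miss_pct = 1.5           # From the text: guidance missed consensus by 1.5%.
extra_headwind_b = 3.0   # From the text: H20 headwind was $3B larger than expected.

# Back out consensus from the guidance and the size of the miss.
consensus_b = guidance_b / (1 - miss_pct / 100)

# Add back the unexpected part of the headwind and compare again.
adjusted_guidance_b = guidance_b + extra_headwind_b
adjusted_beat = pct_vs_estimate(adjusted_guidance_b, consensus_b)

print(f"Implied consensus: ${consensus_b:.1f}B")
print(f"Guidance vs. consensus with expected headwind: {adjusted_beat:+.1f}%")
```

Under that assumed midpoint, the adjusted figure comes out just above 5%, consistent with the claim above.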

g. Call & Release

AI Chip Boom Runway:

The main debate surrounding Nvidia today is about how long this current supercycle will last. When looking at reiterated massive CapEx budgets from mega-cap tech, new $40B chip orders from Oracle, the fact that agentic reasoning models consume 100x-1,000x more compute than their predecessors and massive sovereign AI deals being struck, things still look excellent. 10% Q/Q growth for the data center and a 200%+ 2-year demand CAGR for that segment says it all.
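
For a sense of what those growth rates mean in compounding terms, here is a short sketch using the standard CAGR formula. It uses only the two rates quoted above, with no actual revenue figures; the function names are my own.

```python
def growth_multiple(rate: float, periods: float) -> float:
    """Total growth multiple from compounding a per-period rate."""
    return (1 + rate) ** periods


def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between a start and end value."""
    return (end / start) ** (1 / years) - 1


# A 200% CAGR sustained for 2 years implies a 9x larger segment.
two_year_multiple = growth_multiple(2.0, 2)

# 10% Q/Q growth, if it held for four quarters, annualizes to about 46%.
annualized_from_qoq = growth_multiple(0.10, 4) - 1

print(f"2-year multiple at 200% CAGR: {two_year_multiple:.0f}x")
print(f"Annualized 10% Q/Q growth: {annualized_from_qoq:.1%}")
print(f"Round trip CAGR check: {cagr(1.0, two_year_multiple, 2):.0%}")
```

The gap between a 200%+ trailing CAGR and a roughly 46% annualized sequential pace shows how much of the growth came earlier in the two-year window.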

But how do we know when things will eventually slow down? What would cause that? In my mind – three things to focus on. First, any challenge to its large tech lead would diminish its near-monopoly hold on the GPU market. Many bright analysts in the space seem to think AMD’s newest GPUs will rival Nvidia’s. I’ve been hearing that for over a year and also hearing AMD pounding their chests about catching up to Nvidia as well. The issue? They’re catching up to the chips that Nvidia is already upgrading with massive performance gains. It is very hard to catch the leader when they’re debuting new platforms every 12 months that boast giant leaps in power and efficiency. If anyone can catch Nvidia, it’s probably AMD. But? Nobody has done it. That’s why Nvidia is fetching a 60%+ EBIT margin right now. Tech leads inherently foster pricing power and Nvidia’s pricing power remains abundant. So that part of the runway equation looks very good.

The second piece is how much hardware improvement Nvidia has left in the tank. It needs to keep delivering large boosts in productivity with every single new chip and switch. That’s how it can debut hardware that is better-suited for newer reasoning and agentic models. And? That’s the only reason large customers will remain motivated to keep shelling out tens of billions of dollars for every new platform Nvidia launches. For context, Microsoft is using 5X more tokens per quarter on inference. Driving chip upgrades is how Nvidia will keep that pattern humming.

If Nvidia keeps exponentially improving its tech for more advanced models and use cases, clients really are left with no choice but to buy. If Amazon is running cloud workloads on much better chips than Google Cloud or Microsoft Azure, that’s a massive advantage that the other two won’t complacently accept. If Amazon is running these same workloads on slightly better chips than Google or Microsoft, there’s naturally a lower sense of urgency for the others to spend. Slower improvement would incentivize others to just go with what they already have (and pocket that extra CapEx).

This performance improvement piece of the demand runway also looks very good. Blackwell GPUs offer 30x token throughput gains vs. older Hopper GPUs on Meta’s Llama 3 model. It also tripled performance per GPU and, with the help of NVLink 72, 9Xed GPU linking on Meta’s popular model. And generally speaking, Blackwell averages 40x speed and throughput gains vs. Hopper for a typical client. That certainly doesn’t sound like pace of improvement is grinding to a halt.

“We remain committed to our annual product cadence with our road map extending through 2028 tightly aligned with the multiple year planning cycles of our customers.”

CFO Colette Kress

The three model scaling laws all have plenty of room to keep improving and fostering better chip performance. Even pre-training processes, which entail adding more data to models, have a ton of upgrading left to do. And for post-training (retraining models with reinforcement learning) and inference time scaling and reasoning (making models think harder when desired answers are more complex), both scaling laws remain chock-full of opportunity.

The final piece is tightly tied to the second piece. It's return on investment. If companies (mainly hyperscalers) are enjoying sub-one year payback periods on their infrastructure spend, of course they will keep investing heavily into the opportunity. As of last quarter, $1 spent was netting $1.25 per year in revenue to foster those required excellent returns. Companies cannot embrace modern app or data architecture without embracing AI infrastructure first. And? They cannot embrace AI infrastructure with the efficiency needed (to avoid rampant compute inflation) without Nvidia. That’s why hyperscalers are so easily renting purchased GPU capacity at excellent margin.
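
The “sub-one year payback” point follows directly from the $1 to $1.25 figure above. A minimal sketch, with one loud simplification: it measures payback on revenue alone and ignores operating costs, depreciation and margins, so it is an upper bound on how attractive the return is rather than a full ROI model.

```python
def payback_years(investment: float, annual_return: float) -> float:
    """Years needed for cumulative annual returns to cover the initial investment."""
    if annual_return <= 0:
        raise ValueError("annual_return must be positive")
    return investment / annual_return


# From the text: $1 of infrastructure spend nets $1.25 per year in revenue.
payback = payback_years(1.00, 1.25)

print(f"Payback period: {payback:.1f} years (about {payback * 12:.1f} months)")
```

If that $1.25 figure fell below $1.00, the payback period would stretch past a year, which is the kind of shift that would weaken the investment case described above.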

These are the three things to focus on for bulls. Every chip boom has proven to be cyclical. While this cycle is far larger than any other we’ve had, its revenue opportunity isn’t unlimited. As of May 2025, all three variables that factor into the unknown demand runway look very good.

“Global demand for NVIDIA’s AI infrastructure is incredibly strong. AI inference token generation has surged tenfold in just one year; as AI agents become mainstream, the demand for AI computing will accelerate.”

Founder/CEO Jensen Huang

Data Center Footprint Partnerships & Sovereign AI News:

As discussed during the quarter, Nvidia will build AI factories in Texas and Arizona to manufacture Blackwell (and eventually Rubin) Supercomputers. It’s also working with Foxconn and the Taiwanese Government on a new AI supercomputer.

And as highly publicized, it partnered with the Kingdom of Saudi Arabia to build AI factories in that nation, as well as with the UAE to build a 5 gigawatt AI cluster in Abu Dhabi. It has a “line of sight to building tens of gigawatts in the not too distant future.” That bodes very well for the runway longevity debate already discussed. The UAE deal features OpenAI, Oracle, Cisco and SoftBank partnerships.

Countries across Asia and Europe are now urgently racing to deploy next-gen AI infrastructure, and Nvidia is by far the best candidate to help them do that. Sovereign has emerged as a “new growth engine,” with many more deals coming (per Jensen).

- The company expanded its partnership with Alphabet to accelerate physical AI/robotics work and the pace of drug discovery.

- Will open a new research center in Japan, which will “host the world’s largest quantum research supercomputer.”

Data Center Compute Product Updates:

In its perpetual quest to improve GPU performance as client needs evolve, Nvidia has already extracted 50% higher overall performance from its Blackwell GPUs through software-level optimizations (in just 30 days). It expects that to continue, with the help of Blackwell Ultra and Dynamo. Speaking of which:

Blackwell Ultra is an update to the Blackwell platform. Per Nvidia’s investor materials, it comes with more compute scalability and large inference performance gains, and is meant for the most complex agentic and physical AI use cases. Nvidia also released Dynamo, which is essentially a batch of software optimizations to make its GPUs more efficient for goal-oriented agentic tasks. This is already enabling 30x throughput gains for DeepSeek’s R1 reasoning models vs. pre-Dynamo Blackwell. Capital One is using Dynamo to reduce output token latency by 80%. Anything Nvidia can do to extract more hardware efficiency from the software side of its business means more differentiation vs. less vertically integrated competition.

As CFO Colette Kress rightly said, this is why a fully integrated and world-class software arm to complement its GPUs is so important. It means Nvidia can launch unmatched hardware and then make it even more unmatched through this layer of innovation.

I think its NVLink Fusion launch is also worth highlighting. This allows companies to build “semi-custom” AI infrastructure with Nvidia and its integration ecosystem. GPUs are general-purpose in nature. They’re not granularly designed for every single niche use case like an Application-Specific Integrated Circuit (ASIC). By pairing with Marvell and a few other partners, Nvidia can more easily emulate purpose-built hardware and capture more of that demand.

Data Center Networking & Supercomputer System Updates:

On the networking side, it launched its Spectrum-X and Quantum-X networking switches with silicon photonics. I had to look this up. This type of switch combines light-based and electricity-based components in a single chip package, which means more compute density, more GPU connections and lower latency. Makes sense. To quantify this, power efficiency is up 250% with this approach, network resiliency is up 1,000% and customer time to market improved by 30%.

Perhaps most importantly, its Grace-Blackwell (GB) NVLink 72 Supercomputer entered full-scale production on schedule. It’s delivering far lower cost per inference and large manufacturing yield gains. Noticing a theme? Those gains are expected to keep coming and to help make Blackwell NVDA’s strongest product launch ever. The new GB300 system (which uses Blackwell Ultra) should deliver another 50% boost to inference density and memory vs. GB200. Notably, GB300 architecture is quite similar to GB200, which means lower-friction adoption and lower transition risk.

“NVLink is a new growth vector and is off to a great start with Q1 shipments exceeding a billion dollars.”

CFO Colette Kress

- Spectrum-X added Meta and Alphabet as new customers this quarter.

- Launched its latest full-service Supercomputer system called DGX SuperPOD. This is built for the agentic era.

- And in a push to get clients using more of its products (and to capture the retention boost that comes with that), it launched the Nvidia AI Data platform. It offers tightly integrated storage products from partners like Dell, IBM, Nutanix, etc.

- Spectrum-X is now at an $8B revenue run rate. It was supposed to ramp to a “multi-billion” business this year. $8B is quite the “multi-billion” result.
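For a sense of scale on that $8B figure, a run rate is usually an annualized number. Below is a minimal sketch, assuming the common convention that run rate equals the latest quarter's revenue times four; the convention and the function name are my assumptions, not something Nvidia spelled out.

```python
def implied_quarterly_revenue(annual_run_rate_billions: float) -> float:
    """Quarterly revenue implied by an annualized run rate.

    Assumption: run rate = most recent quarter x 4 (a common convention,
    not confirmed for this specific disclosure).
    """
    return annual_run_rate_billions / 4


print(implied_quarterly_revenue(8.0))  # 2.0 (billions of dollars per quarter)
```

Under that assumption, the product line would be doing roughly $2B per quarter.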

Singapore:

There has been some controversy about Singapore vastly over-indexing in terms of chip orders per GDP. This is because most of Nvidia’s large clients “use Singapore for centralized invoicing.” 99% of Singapore billings were for U.S.-based orders. Great to hear.

Quick Notes on NeMo (already defined) Driving Great Outcomes:

- Cisco improved model accuracy by 40% with NeMo.

- Nasdaq enjoyed 30% model response accuracy and latency improvements.

- Shell boosted model response accuracy by 30%.

- A new “parallelism technique” for NeMo reduced average model training time by 20%.

- Its Llama Nemotron model (built with Meta’s open-sourced Llama models) boosts response accuracy by 20% and inference speed by 5X.

Quick Notes on Other Revenue Segments:

Gaming and AI PCs benefitted from improving supply conditions. It expects that to keep playing out next quarter. Its developer platform (GeForce) now has the “largest footprint of PC developers.” It launched new Blackwell-featured RTX laptops that “double frame rate and slash latency.”

- Launched new RTX (its product suite for advanced graphics) servers purpose-built to help companies running on legacy infrastructure technology stacks to modernize.

- The Nintendo Switch (which leans heavily on Nvidia) has shipped 150M units, “making it one of the most successful gaming systems in history.”

In Professional Visualization, tariffs hurt demand a bit during the quarter. Still, Omniverse (its product enabling affordable and scalable digital twin creation) is saving Taiwan Semi “months of work” and accelerating Foxconn thermal simulations by 150X. Pegatron is using Omniverse to lower assembly line defect rates by 67%.

In Automotive and Physical AI, Uber, Boston Dynamics and several more companies are using its Isaac Groot model for humanoid robot app development and other physical AI use cases. It also officially released Cosmos, which is its world foundation model with an intimate understanding of the laws of science for Physical AI apps.

- Broadly launched its Nvidia Halos autonomous driver safety system.

- Collaborating with General Motors on their next-gen cars. They’ll be using Omniverse and Isaac Groot.

h. Take

Excellent quarter despite the China headwinds. The guidance is stellar when considering the revenue hit from H20 export bans is $3B larger than expected. This just goes to show how strong momentum remains for the rest of its business. As long as this cycle lasts, it is Nvidia’s world and we’re just living in it. Jensen is a superstar; this company is iconic; this quarter was again great. The beats were smaller than we’ve gotten used to, but that’s inevitable as Nvidia trains sell-siders to expect explosive upside every quarter. Eventually estimates naturally catch up. And regardless, the results they’re putting up at this scale are simply bonkers.