This article contains full reviews of Nvidia, CrowdStrike & MongoDB earnings.

Housekeeping:

- A favor request – please respond to this send with a one-word email. This will help greatly with deliverability on the new platform. Thank you.

- Content tagging in the categories on the new site is currently a mess. I'm going to need to manually do it myself when I can find the time to make sure it's perfect. If you're struggling to find an article in the archive, please reach out and we'll help.

- If you responded to an email or reached out to customer service with a question since the new site launched, I did not receive it. Settings were mismarked and have since been fixed. If you're still having issues with anything, please reach out! We're here to help. Apologies for the inconvenience.

- If you're not getting emails successfully delivered to your inbox, there's a very good chance they're going to your "promotion" folder. Adding this email address to your contacts or safe-sender list will fix that.

- If you're still having any issues at all, please let us know. We will help!

- Max subs – the new Discord room is part of your existing subscription! It's where I field questions, share real-time news alerts and invite you all to collaborate. Sign up (must be Max sub to join)! Instructions can be found here.

- The Snowflake snapshot was sent in the Discord room. That review will be emailed this week. Headline numbers looked really good.

In case you missed it from this earnings season:

- Nu & Airbnb earnings reviews

- Cava & On Running earnings reviews

- Datadog & Sea Limited Earnings Reviews (sections 1 & 2)

- Palo Alto & Spotify Earnings Reviews (sections 1 & 2)

- AMD Earnings Review (section 4)

- Trade Desk, Duolingo & DraftKings earnings reviews

- Uber & Shopify earnings reviews

- Lemonade, Hims & Coupang Earnings Reviews

- Mercado Libre & Palantir Earnings Reviews

- Amazon & Microsoft Earnings Reviews

- Meta & Robinhood Earnings Reviews

- SoFi & PayPal Earnings Reviews

- Alphabet & Tesla Earnings Reviews

- Chipotle Earnings Review

- ServiceNow Earnings Review

- Netflix & Taiwan Semi Earnings Reviews

- Starbucks & Apple Earnings Reviews

& my current portfolio/performance.

1. MongoDB (MDB) – Earnings Review

a. MongoDB 101

MongoDB is a key player in data storage and analytics, with a document-oriented setup. This differs from legacy relational-style databases and next-gen versions like Snowflake’s. How so? Relational databases store data in rigid rows and columns linked by pre-set relationships. These databases look like giant Excel spreadsheets and use structured query language (SQL) to work. The datasets are fixed, with formatting and filtering more limited.
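To make the row-and-column vs. document contrast concrete, here is a minimal sketch in plain Python. It does not use MongoDB or any SQL engine; the table names, field names and values are all hypothetical and only illustrate the two shapes of data.

```python
import json

# Relational style: fixed columns per table, with related data kept in a
# second table and linked by a key. All names and values are hypothetical.
customers = [
    {"customer_id": 1, "name": "Ada"},
]
orders = [
    {"order_id": 10, "customer_id": 1, "item": "keyboard"},
    {"order_id": 11, "customer_id": 1, "item": "monitor"},
]


def orders_for(customer_id):
    """Emulate a SQL join by matching rows on the foreign key."""
    return [row for row in orders if row["customer_id"] == customer_id]


# Document style: one self-describing record with nested fields.
# Individual entries can carry fields that others lack.
customer_doc = {
    "name": "Ada",
    "orders": [
        {"item": "keyboard"},
        {"item": "monitor", "notes": "free-text field only this order has"},
    ],
}

relational_items = [row["item"] for row in orders_for(1)]
document_items = [order["item"] for order in customer_doc["orders"]]

print(relational_items == document_items)
print(json.dumps(customer_doc, indent=2))
```

Both shapes hold the same facts. The difference is that the document keeps related data together and tolerates one-off fields, while the relational layout needs a join and a schema change to do the same.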

Per leadership, relational databases cannot seamlessly handle unstructured data like MongoDB’s “not only SQL” (NoSQL) data lake can. MDB's document-oriented setup organizes and uses unstructured data better, which is vital in the age of GenAI and agentic AI. Relational and SQL are fine for traditional machine learning, but less effective for agentic, multi-step, goal-oriented tasks. It’s worth noting that Snowflake and Databricks would argue that their modern relational databases have been retrofitted to handle scalable unstructured and semi-structured data ingestion, but MongoDB leading in this area is a commonly held opinion.

- MongoDB’s NoSQL document-oriented database is called Atlas.

- The newest version of its database software that powers Atlas is called MongoDB 8.1. This offers 20% to 60% performance boosts vs. the old version and better time series (timeline-based) data services.

Atlas’s cloud-native database architecture uses a group of servers (or a cluster) to actually store data for eventual product creation within its platform. The nature of MongoDB’s Atlas product allows clusters to be easily added to or subtracted from for seamless tweaking as needs fluctuate. It also offers MongoDB Search for data querying and MongoDB Data Lake for housing unstructured data for querying and analytics.

More Core Atlas Products:

- Atlas App Services is a server-less toolkit for developers to help them build modern apps.

- Vector Search allows clients to seamlessly scrape insights from data. It allows for theme-based querying rather than just word-based. It also provides retrieval-augmented generation (RAG). This pushes “semantic” search results into associated large language models (LLMs) to uplift querying precision.

- Atlas Stream Processing allows for real-time data ingestion. That matters a lot for app developers who constantly toy with, split-test and render every single little detail within their apps. Real-time access to data querying helps make that process painless.

- Atlas Search Nodes (nodes = servers) automate the optimal usage of compute capacity and separate database & search functions to enable easier, more affordable scaling.
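For readers who want a feel for what “theme-based” vector search means, here is a minimal sketch. It is not Atlas Vector Search or any MongoDB API. The tiny three-number “embeddings” are made up by hand (real embedding models output hundreds or thousands of dimensions), and cosine similarity is just one common way to compare them.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical hand-made embeddings: similar themes get similar numbers.
documents = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "money-back guarantee": [0.8, 0.2, 0.1],
}


def vector_search(query_vector, k=2):
    """Rank stored documents by similarity to the query and keep the top k."""
    ranked = sorted(
        documents,
        key=lambda name: cosine_similarity(query_vector, documents[name]),
        reverse=True,
    )
    return ranked[:k]


# Pretend embedding of the question "can I get my money back?"
query = [0.85, 0.15, 0.05]
top_matches = vector_search(query)
print(top_matches)
```

The query shares no keywords with “refund policy,” yet it lands next to it because the vectors are close. In a RAG setup, those top matches are what would be handed to the LLM as context.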

Product Expansion & AI:

MongoDB thinks it’s great at automating the cumbersome data modernization work needed for migrations. Its Relational Migrator (RM) handles this process and takes the headache out of it for clients. Now, it’s focused on leveraging RM to let companies actively re-code their legacy applications and create more of an end-to-end, full-service migration service. They call this the “modernization factory.” With this holistic strategy, MongoDB doesn’t just aid with data migration, but also directly automates software modernization so apps run seamlessly on its document-oriented foundation.

Its recent purchase of Voyage AI provides another product expansion opportunity. Voyage is a key player in GenAI and agentic AI model trustworthiness. While models routinely create delightful, jaw-dropping experiences, they’re also wrong a lot. Hallucination rates (wrong answer rates) can often reach 25%, making models really only useful when you know what a right answer should look like and are double-checking. That limitation puts a tight ceiling on utility and is what Voyage aims to fix. It has two products. First is its set of Vector Embedding Models. As leadership puts it, these are the “bridge between models and a client's private data.” They allow for meticulous information transfer into models. They represent data as number sequences, grouping related data points more closely and in a much more standardized manner. All of this helps with machine learning accuracy rates and uncovering patterns to reduce model mistakes. Second, it offers reranking models. These ingest and reorganize materials based on data relevance for a given model input. They sift through all queried information to eliminate waste and inaccuracies. With Voyage, MDB gains a broader suite of GenAI services to round out a more cohesive platform.

- MDB will most meaningfully benefit from AI app monetization, which comes after infrastructure. Anything they can do (like RM, MAAP and Voyage) to expedite client adoption is good for their core business… while creating more revenue opportunities.

- Voyage released two new retrieval models with better model accuracy and price performance. Voyage 3.5 is the firm’s latest embedding model, which lowers data storage costs by over 80%.

- MongoDB launched its own Model Context Protocol (MCP) service with Cursor, GitHub, Anthropic and other needed 3rd-party connections.

- MongoDB AI Applications Program (MAAP) offers an environment, a series of templates, guardrails and 3rd-party integrations to diminish GenAI app creation friction.

- This is more of a professional service and support product for helping clients build apps. Atlas App Services is more of a self-service product for developers to build modern apps themselves.

In summary, it now has Atlas, significant help for app and data modernization, real-time data streaming, world-class search tools and highly-regarded embedding and reranking models under one roof.

Non-Atlas Business:

Enterprise Advanced (EA) refers to its on-premise database and app bundle (Atlas is cloud-based). It allows companies to purchase licensing for subscription-based usage (rather than paying for consumption under Atlas).

Business Model:

Note that most of MongoDB’s growth is based on acquiring new customers and migrating their workloads onto the platform, as well as consumption-based revenue from customers using its data products and growing workloads.

b. Key Points

- Document-oriented product niche is resonating in the age of AI.

- Rounding out the product suite with compelling expansions.

- Outperformance was partially driven by the higher-quality Atlas revenue bucket.

- Go-to-market changes are working.

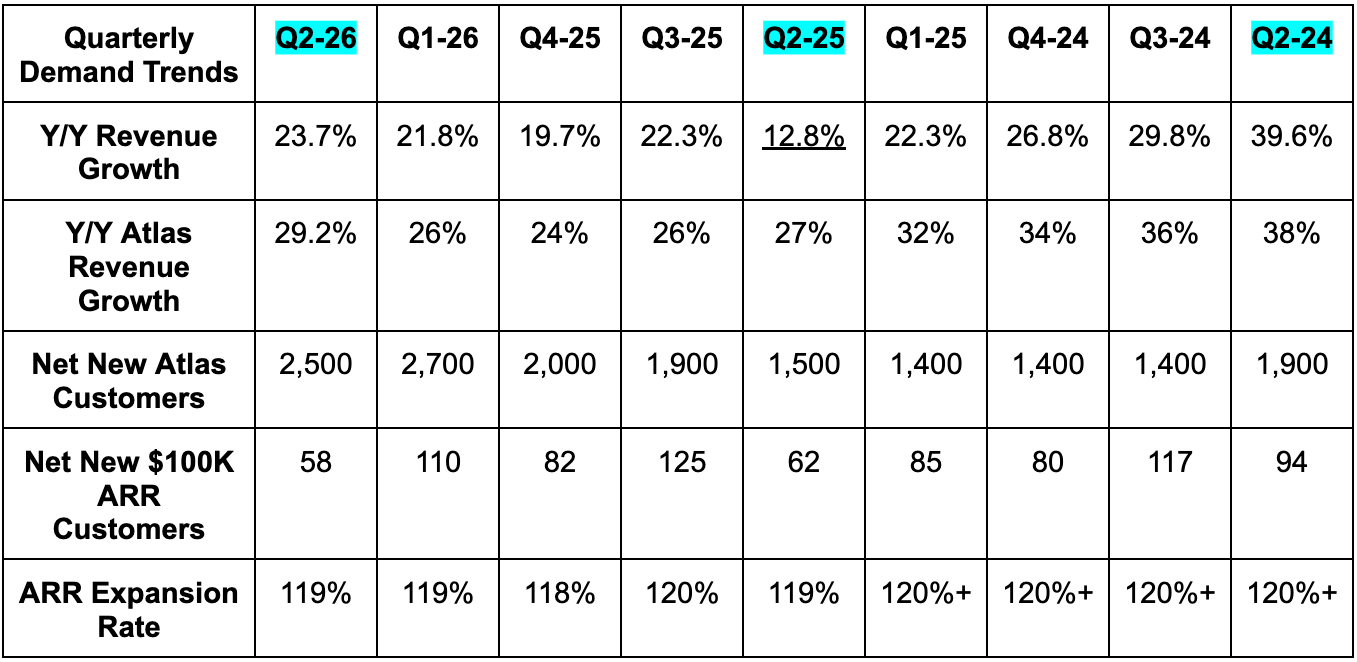

c. Demand

- Beat revenue estimates by 7.4% & beat guidance by 6.8%.

- Its 18.1% 2-year revenue compounded annual growth rate (CAGR) compares to 23.2% last quarter and 26% two quarters ago.

- Fastest rate of Y/Y revenue growth in 6 quarters (helped by easy Y/Y comps).

- Beat Atlas revenue estimates by 4%.

- This is by far the highest-quality MDB revenue. Especially good to see this segment outperform.

- Net new Atlas revenue was its highest in over a year.

- Non-Atlas revenue (just revenue - Atlas revenue) actually rose by 10% Y/Y vs. expectations of a nearly 10% Y/Y decline. More on this later.

- Non-Atlas ARR rose by 7% Y/Y.

- Slightly missed $100K+ ARR client estimates by 0.3%, or 7 clients.

- Beat billings estimates by 7%.
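For anyone curious how the 2-year revenue CAGR in the bullets above is put together, here is a minimal sketch of the standard formula. The function names are mine, and the 20% and 10% inputs are hypothetical, not MDB's actual growth rates.

```python
def cagr(start_value, end_value, years):
    """Annualized growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


def two_year_cagr(growth_year_1, growth_year_2):
    """Compound two consecutive Y/Y growth rates into one annualized rate."""
    return ((1 + growth_year_1) * (1 + growth_year_2)) ** 0.5 - 1


# Hypothetical inputs: 20% growth one year, then 10% the next.
print(f"{two_year_cagr(0.20, 0.10):.1%}")
# Same answer from the revenue levels themselves: 100 -> 120 -> 132.
print(f"{cagr(100, 132, 2):.1%}")
```

The point of the 2-year view is to smooth out easy or tough comps: one strong year following a weak one can flatter the Y/Y number while the 2-year CAGR keeps drifting lower.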

On customer growth, of the 2,500 Atlas clients it added this quarter, 300 came from Voyage AI M&A.

MDB’s non-Atlas revenue is very high-margin business. It’s also unpredictable, considering it comes from multi-year contracts subject to hefty closure and timing uncertainty. Because of this and the consumption-based nature of MDB’s business, it is among the most aggressive guidance sandbaggers out there. And rightfully so when your business trends aren’t solely driven by highly visible and pre-set subscription rates. Like it should, it bakes significant pessimism into forward guidance to leave room for upside in results. When the worst-case scenario doesn't play out, it outperforms on revenue. And based on the sky-high margin nature of its non-Atlas business, when that segment outperforms on demand, the commensurate profit outperformance (as you’ll see below) is massive.

For Atlas, great consumption during the month of May (cited on the last call) powered the quarterly beat. This strength was especially pronounced in the all-important USA market, which is where go-to-market (GTM) changes (more later) occurred. That’s evidence of these changes working. For non-Atlas, revenue vastly exceeded expectations due to outperformance in multi-year deal closings. That bodes well for the on-premise demand runway, as some customers with especially sensitive data and apps continue to embrace a hybrid infrastructure approach. Atlas revenue is the more important bucket, but this is still encouraging. If you recall, MDB offered disappointing initial FY 2026 guidance. This was because of a large non-Atlas headwind via lapping abnormally successful years of growth. 2026 is going much better than expected in this area, offering more evidence that this team loves to under-promise and over-deliver. Importantly, guidance continues to assume this headwind persists. It was updated to reflect the successful quarter, but Q3 and Q4 expectations remain unchanged. That likely positions them for more outperformance. Specifically, the annual headwind is now expected to be $40M vs. $50M previously.

Neither Atlas nor non-Atlas enjoyed aggressive demand pull-forwards or any one-off event that propped up results. The beats were mainly structural in nature. Non-Atlas helped the beat more than Atlas, but Atlas still materially contributed.

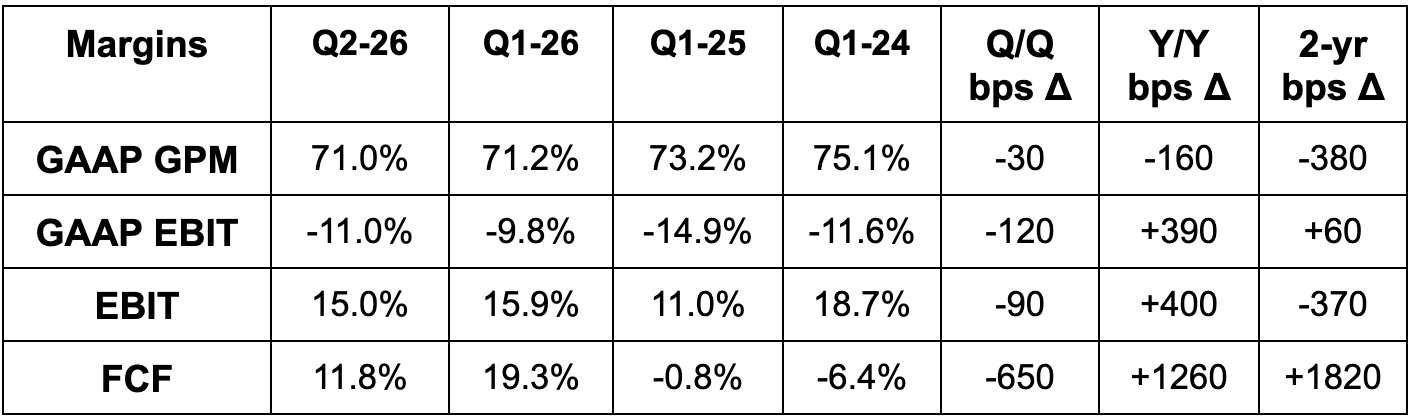

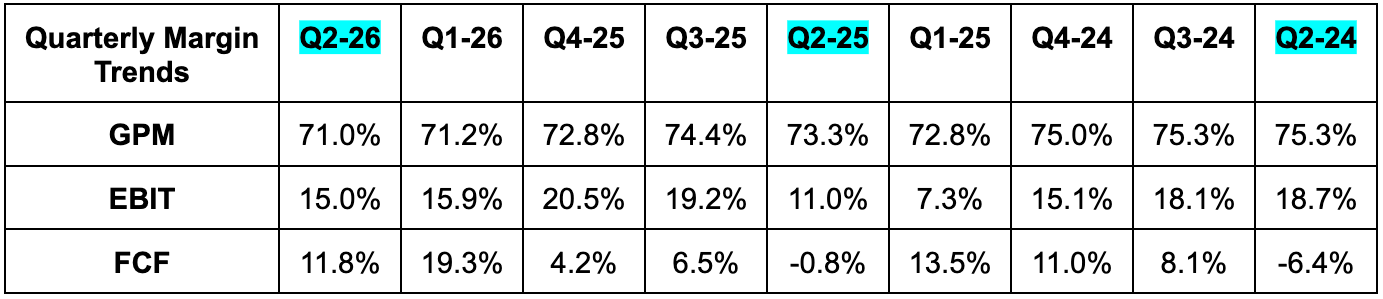

d. Profits & Margins

- Missed 74.5% GPM estimate by 70 bps.

- Outsized Atlas growth is a GPM headwind vs. their higher-margin licensing and on-premise business.

- Atlas was 74% of revenue vs. 63% two years ago.

- Beat EBIT estimate by 51% & beat guidance by 52%.

- Beat $0.66 EPS estimate by $0.42 & beat guidance by $0.44.

- EPS rose by 43% Y/Y.

- Free cash flow (FCF) rose from -$4M to $70M Y/Y.
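The mix-shift headwind to gross margin mentioned above is just a weighted average. Here is a minimal sketch. The 63% and 74% Atlas revenue shares come from the bullets, but MDB does not disclose the segment margins used here: the 70% Atlas and 90% non-Atlas figures are hypothetical placeholders chosen only to show the direction of the effect.

```python
def blended_gross_margin(atlas_mix, atlas_gpm, non_atlas_gpm):
    """Revenue-weighted average gross margin across the two buckets."""
    return atlas_mix * atlas_gpm + (1 - atlas_mix) * non_atlas_gpm


# Hypothetical segment margins (not disclosed figures).
ATLAS_GPM = 0.70
NON_ATLAS_GPM = 0.90

two_years_ago = blended_gross_margin(0.63, ATLAS_GPM, NON_ATLAS_GPM)
this_quarter = blended_gross_margin(0.74, ATLAS_GPM, NON_ATLAS_GPM)

print(f"Blended GPM at 63% Atlas mix: {two_years_ago:.1%}")
print(f"Blended GPM at 74% Atlas mix: {this_quarter:.1%}")
```

Even with neither segment's margin changing, the blended figure falls as Atlas becomes a bigger slice of revenue. That is why a small GPM miss alongside an Atlas beat is not alarming on its own.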

e. Balance Sheet

- $2.3B cash & equivalents.

- No debt.

- 10% Y/Y share dilution. This includes $200M in buybacks and Voyage AI M&A.

f. Guidance & Valuation

- Raised annual revenue guidance by 3.5%, which beat by 2.7%.

- Revenue raise was $70M vs. the $38M Q2 outperformance, implying rising 2nd-half-of-year expectations.

- Raised annual EBIT guidance by 17.5%, which beat by 16%.

- EBIT raise was $44M vs. the $28M Q2 outperformance, implying rising rest-of-year expectations (specifically stronger Q4 expectations considering Q3 EBIT guidance was roughly in line).

- Raised annual $3.03 EPS guidance by $0.65, which beat by $0.59.

- Raised implied annual low-20% Atlas revenue growth target offered at the start of the fiscal year to a mid-20% growth target.

- Raised non-Atlas revenue growth guidance from nearly -10% Y/Y to about -5% Y/Y.
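The “raise vs. beat” logic in the revenue and EBIT bullets is simple subtraction, sketched below. The dollar figures are the ones cited above (in millions); the function name is mine.

```python
def implied_forward_raise(annual_raise, quarter_beat):
    """Portion of an annual guidance raise not explained by the quarter's beat."""
    return annual_raise - quarter_beat


# Figures from the guidance bullets, in $M.
revenue_forward_raise = implied_forward_raise(annual_raise=70, quarter_beat=38)
ebit_forward_raise = implied_forward_raise(annual_raise=44, quarter_beat=28)

print(f"Implied rest-of-year revenue raise: ${revenue_forward_raise}M")
print(f"Implied rest-of-year EBIT raise: ${ebit_forward_raise}M")
```

If a company only passed through its beat, both numbers would be zero. Anything above zero means leadership is lifting expectations for the quarters still to come.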

For Q3, revenue guidance was modestly ahead of expectations, EBIT was in line and EPS was $0.78 vs. $0.73 expected. They expect non-Atlas revenue growth to be roughly -20% Y/Y as it laps fantastic multi-year contract performance during Q3 2025. That will lead to EBIT margin falling Q/Q.

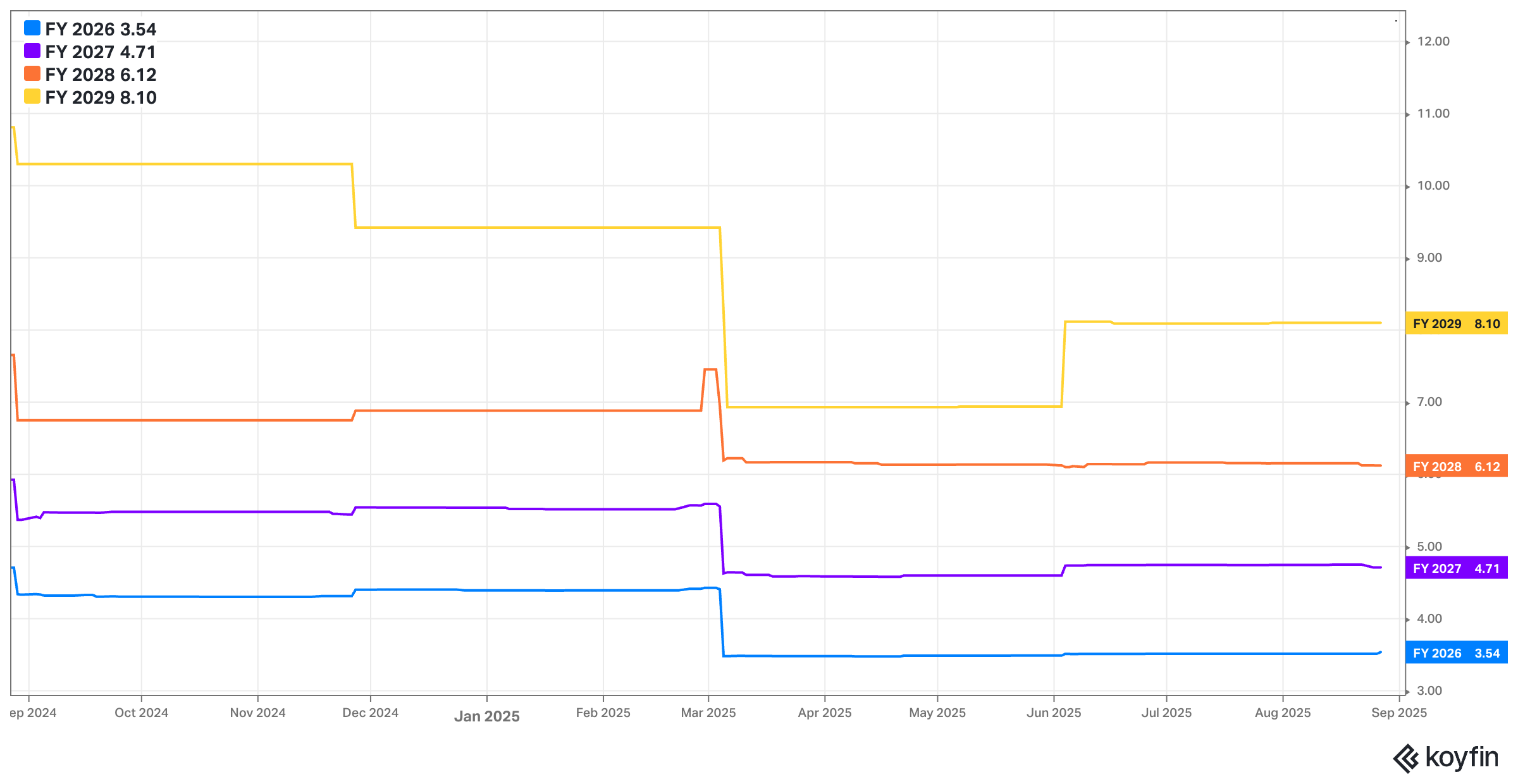

MDB trades for 79x forward EPS. EPS is expected to grow by 1% this year, 15% next year and 26% the following year.

g. Call Highlights

Why MongoDB is Winning & Well-Positioned:

The bulk of CEO Dev Ittycheria’s prepared remarks were spent on the 4 reasons why MDB had a great quarter and expects to have many more great quarters going forward. We’ll walk through those 4 items (which have considerable overlap) here.

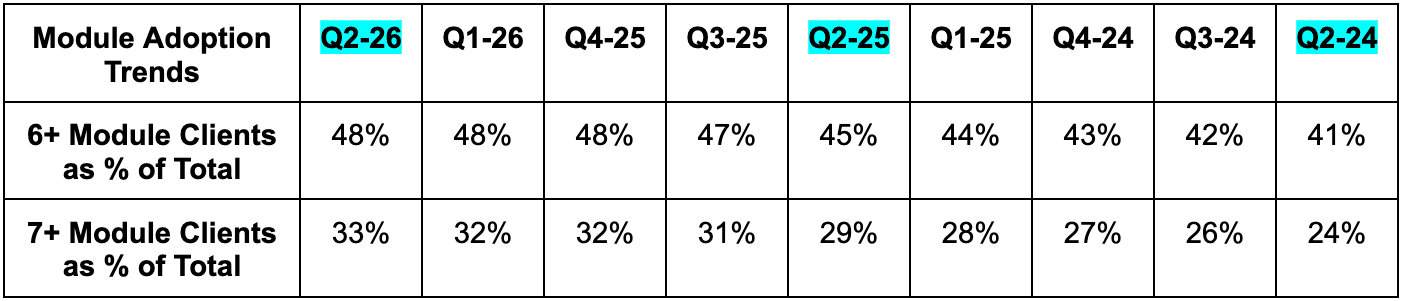

First, MongoDB seamlessly scales with the big boys in a way that yields better up-time, security, scalability and overall performance. Atlas and its on-premise business are both capable of ingesting data at massive scale without any bottlenecks. That’s why it has 70% of the Fortune 500, 14 of the 15 largest healthcare firms and 9 of the 10 largest manufacturers in its customer base. These logos rely on massive scalability with optimal efficiency to ensure they can meet profit expectations quarter after quarter. MDB is a great partner in this pursuit.

Second, as the 101 section mentioned, document-oriented is best for the “most mission-critical and transaction-intensive applications.” It’s perfectly capable of conducting full ACID transactions spanning many different documents, and therefore handling more complexity. As an aside, this is why both Snowflake and Databricks have added JSON-powered Postgres products to better support online transaction processing (OLTP), which is already a core competency for MDB.

- JavaScript Object Notation (JSON): Open-source format for data connections and exchanges. MongoDB heavily leans on JSON to power its NoSQL database services. When customers use Atlas, they’re probably using JSON-formatted data. MDB will then convert JSON files into Binary JSON (BSON) (which MongoDB created) for its internal storage and performance optimizations.

- MongoDB views Databricks and Snowflake (both relational players) adding OLTP support via M&A as evidence of how hard this is to build internally, and also as evidence of MDB’s core niche being extremely compelling. They see their OLTP capabilities as best-in-class and the “Lake Base” launch from Databricks does not change that opinion at all.

- This transaction-intensive skill is why it has 90 Atlas customers with over 60 million customer records stored, and it's how it helped Deutsche Telekom 15X its login capacity with better resiliency and customer engagement.
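To show what the JSON-to-BSON conversion described above looks like in practice, here is a minimal hand-rolled sketch. It is not MongoDB's code or driver. It handles only flat documents with string and 32-bit integer values, following the byte layout in the public BSON specification. Real drivers cover many more types.

```python
import json
import struct


def encode_bson(document):
    """Encode a flat dict of str/int32 values using the BSON byte layout."""
    body = b""
    for key, value in document.items():
        name = key.encode("utf-8") + b"\x00"  # field name as a C string
        if isinstance(value, str):
            data = value.encode("utf-8") + b"\x00"
            # Type 0x02 = string: length prefix counts the trailing null.
            body += b"\x02" + name + struct.pack("<i", len(data)) + data
        elif isinstance(value, int) and not isinstance(value, bool):
            # Type 0x10 = 32-bit integer, little-endian.
            body += b"\x10" + name + struct.pack("<i", value)
        else:
            raise TypeError(f"unsupported type in this sketch: {type(value)}")
    # Total size = 4-byte length prefix + elements + 1-byte terminator.
    return struct.pack("<i", len(body) + 5) + body + b"\x00"


raw_json = '{"hello": "world"}'
document = json.loads(raw_json)
encoded = encode_bson(document)

print(len(raw_json), "characters of JSON text")
print(len(encoded), "bytes of BSON:", encoded)
```

The length prefixes and type tags are what make the binary form quick to scan: a reader can skip over a field without parsing its contents, which plain JSON text does not allow.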

Third, they’re quickly rounding out the product suite in pursuit of being a true platform play. They don’t just offer data storage. To actually create value from scalable data ingestion and organization, vector search, stream processing and Atlas Search Nodes all provide incremental utility vs. point solutions. From there, it ensures data and workloads can be seamlessly migrated and modernized via Relational Migrator and that it effectively vets and places that data into models to minimize inaccurate response rates. That’s so important in a world where 95% of AI spend still comes with negative return on investment (ROI). A lot of that is because answers are so frequently wrong and unreliable.

With all of this higher fidelity data usable, it also provides everything a company needs to build apps for the AI era. A true platform play… which is powering larger deals, better retention, broader differentiation and this successful quarter. It frees developers to shed tedious task requirements and “spend less time stitching together disparate systems." And for a company like Agibank in Brazil, it’s the end-to-end value creation that cut costs by 90% and boosted tech stack performance by 400%.

- PostgreSQL (Postgres): Popular, open-source SQL database framework that hyperscalers and several other data vendors are using more frequently.

- Leadership thinks companies picking Postgres over their JSON-based offering are doing so because they don’t know how much better JSON is. It's about awareness, not functionality. Customers routinely pick Postgres, run into performance bottlenecks and migrate to MDB.

The fourth and final point ties closely to the first 3. It entails leadership’s belief that they’re “emerging as the standard for AI applications.” That’s thanks to all the other product value and architecture we’ve already discussed. And fortunately, the AI app layer monetization journey remains in its infancy. The vast majority of financial value creation is still on the infrastructure side, and app monetization will surely follow… timing uncertain.

For now, they’re seeing customers experimenting with productivity tools for coding, document summarization, customer service etc. Early on, there’s more momentum on the self-serve side from AI-native startups than from large enterprises. That’s understandable; startups move more quickly. It talked about an electric vehicle platform using Atlas for its AV program and DevRev (private AI-native company) using it to pocket large efficiency gains. Traction is “real but early.” It also briefly mentioned that “one of the fastest-growing startups in the Bay Area has bet big on MDB.”

More on AI:

The contribution from AI-native customers is still immaterial to MDB’s results. Outperformance was driven by core business momentum, as customers still haven’t gotten to the app transformation phases of their businesses.

- Launched new Voyage context & reranking models.

- Added a new LangChain (AI-native firm) partnership.

- Added Temporal, LangChain and Galileo (not SoFi’s Galileo) to its AI partner roster.

Public Sector Focus:

MongoDB is pursuing FedRAMP High Impact Level 5 (IL5) authorization to accelerate public sector momentum. That has been a key unlock for other enterprise software firms operating in different categories, such as CrowdStrike. The same should be true here. In other positive government news, it was also added to the U.S. Intelligence Community portion of AWS’s marketplace.

Improving Go-To-Market:

As a reminder, MongoDB overhauled its go-to-market a few quarters ago. They moved resources from mid-market direct sales to large enterprises, as ROI there was higher. They pivoted to mid-market demand being addressed via self-serve channels and implemented a managed and self-serve go-to-market hybrid for customers wanting more freedom to build, without needing to handle back-end maintenance. That has been extremely popular and is leading to direct sales growth moving to self-serve. Next, they tweaked sales incentives to prioritize key performance indicator health. Finally, they’ve gotten louder and more proactive in messaging the value-proposition of the product suite. This quarter, that included a series of global developer events, with “hands-on” consulting to inspire product creation. It also includes more active pursuit of SQL developers unfamiliar with MDB’s products. Leadership thinks the excellent quarter is evidence of these go-to-market alterations being well-placed.

h. Take

Excellent quarter that validates the team’s confidence in their AI app layer positioning. While lumpy non-Atlas revenue outperformance did help, the beats were also driven by structural Atlas demand tailwinds and point to a sizable runway ahead. Voyage AI is the perfect complement to this product suite and drives incremental differentiation. I also think that + MAAP does make this a real platform play in enterprise software. I don’t believe that it’s fair to say relational database vendors like SNOW aren’t set to effectively compete for AI workloads, but I do think it’s safe to say that MDB and its differing foundation clearly are. The valuation is a bit stretched following today’s move, but the quarter warrants a larger premium than the company enjoyed before the report. Bulls should be very pleased.

2. CrowdStrike (CRWD) Earnings Review

a. CrowdStrike 101

CrowdStrike is a cloud-native endpoint cybersecurity company. It competes directly with SentinelOne, Microsoft Defender and Palo Alto. Its bread-and-butter is called endpoint detection and response (EDR), which replaces legacy anti-virus (AV). Beyond EDR, it offers applications in cloud security, log management, forensics, identity, data protection etc. to round out its “Falcon Platform.” Falcon’s edge is its ability to digest near-endless amounts of data to automate and improve breach protection. CrowdStrike uses its large and diverse dataset to constantly update Falcon’s efficacy and use cases… all with a single console and single agent to ensure superior interoperability. It can recycle this same data repeatedly, efficiently developing new products for a single interface. More utility without adding complexity or cost. More margin-accretive cross-selling too.

Important Endpoint Security Acronyms:

- Endpoint detection and response (EDR) provides end-to-end visibility, constant monitoring and full protection of endpoints (like an iPhone). It unveils, prioritizes and responds to threats.

- Managed detection and response (MDR) encompasses CrowdStrike’s team of threat hunters, which augment EDR with human touch when needed.

- Extended detection and response (XDR) is EDR with 3rd-party, non-endpoint data sources infused. The incremental data sharpens breach protection and extends it beyond the endpoint.

Important Cloud Security Acronyms (alphabet soup, I know):

- Cloud Security & Posture Management (CSPM) tells you about your vulnerabilities and misconfigurations.

- Cloud Infrastructure Entitlement Management (CIEM) indicates who is entering a software environment. It advises if these entrants are allowed and exactly what they can do.

- Cloud Workload Protection (CWP) is a preventative measure to observe if anything bad is being done by entrants. This sounds alarm bells while preventing and remediating cloud infrastructure attacks. It’s closely related to CSPM and CIEM.

- Application Security Posture Management (ASPM) locates and facilitates the safe control of cloud apps.

- Cloud Native Application Protection Platform (CNAPP) is the overall suite tying all of these cloud products together.

AI:

In the realm of GenAI, Charlotte AI is CrowdStrike’s security copilot. It levels up the capabilities of security analysts by actively detecting anomalies, orchestrating remediation and fixing issues in an automated, precisely-triaged fashion. It’s a force multiplier for efficiency gains in a world where most companies are starved for more security resources and talent. All of this pushes beginner-level security analysts to much higher levels of capability.

- Charlotte AI Agentic Response automates analysts’ troubleshooting, expedites identification of the source of breaches, “maps lateral threat movement” (key in a zero trust world) and offers ideal next steps for fixing issues. This is plugging right into its security operations center (SOC) (more later).

- Launched new AI Agentic Workflows this year. They pull from CrowdStrike models and world-class 3rd-party reasoning models to make these products far more actionable.

- CrowdStrike sees the explosion of AI agents as creating another massive asset class needing protection. It is quickly building out the product suite with this in mind.

Falcon Flex:

Falcon Flex is CrowdStrike’s selling program, which bolsters customer “flex”ibility over product purchases. It allows clients to pay for only the modules they need, as they need them. There are no pre-set commitments and no mandated usage; they can run through credits at their leisure. This will be the firm’s main go-to-market strategy going forward, as it has shown to lower cross-selling friction, raise deal size and create stickier customers. With this, customers can use exactly what they want, when they want, which lowers timing and headache-related issues associated with cross-selling new products.

Important Log Management Ideas & Becoming the Security Operations Center (SOC):

CrowdStrike’s ability to ingest, use and recycle high-fidelity security data is vital for every product it offers. This is how it became the de facto “Security Operations Center,” (SOC) as SOC status requires a holistic view of assets and brings complete hygiene under one vendor (great for interoperability & efficacy).

Within SOC, EDR is the data foundation that gets the ball rolling. To ensure best usage of this data, Security Information and Event Management (SIEM) is used to aggregate security logs/data and assist organizations in identifying and remediating threats faster. Simply put, this drives faster time to value and cuts customer costs. Falcon Fusion Security Orchestration, Automation and Response (SOAR) is what turns this bird’s-eye view into actionable workflows, fixing issues and proactively preventing them. Finally, its exposure management products ensure proper asset configuration with sound hygiene and minimum, only-necessary access permission. Combining these three tools means a customer can use whatever data they want, generate custom, automated workflows, and ensure these workflows are closely tied to better protection, rather than being detrimental to it. There are two key pieces of exposure management:

- Vulnerability management is what uncovers weak spots in security posture.

- Attack Surface management aggregates all possible ecosystem entrances and consolidates protection for easier coverage.

Generally speaking, SOC and its various components enable CrowdStrike to ingest more data, understand its clients more effectively, recycle data and offer more tools. This effectively uses existing assets to generate more and more products, which is highly margin accretive and sticky. CrowdStrike is rapidly adding agentic capabilities to its SOC (through Charlotte AI) to become an “SOC’s best friend” and to insulate it from competitive threats.

Network & Identity Security Expansion:

CrowdStrike recently introduced its unified Falcon Identity security offering, which combines existing capabilities and “protects every human and non-human identity across the full lifecycle in any environment.” Within identity, it also offers network vulnerability scanning help, as well as Privileged Access Management (PAM). These products will mean more direct competition with Zscaler and Palo Alto on the network side, as well as Okta and CyberArk (Palo Alto) on the identity side.

b. July 2024 Outage Reminder (review from last quarter)

Last July, a software update error led to a global outage caused by the company. That fostered considerable blowback against CRWD and the creation of its “Customer Commitment Packages” (CCPs). CCPs offer temporary contractual concessions to customers, such as discounting, extended free trials, comped modules and free professional services help. It’s their apology. CrowdStrike hoped customers would choose more products over extended free trials. Why? Their best-in-class customer service scores and product efficacy (as measured by 3rd-parties) make them exceedingly confident in free modules turning into more paid adoption when CCPs expire later this year.

A few quarters into the CCP program and it is working exactly as planned. Clients are predominantly opting into Falcon modules, positioning the company for easy up-selling as they expire over the next few quarters. This is when the company will finish working through existing CCPs; it ended new CCP issuance last quarter. Two more quarters of impacts.

A lot of the CCP success can be seen within Falcon Flex, which is the mechanism CrowdStrike offered CCPs through. Importantly, a lot of this flex growth is cannibalistic rather than incremental, as existing customers opt into this newer, easier means of consuming modules. So then why does this matter so much? Falcon Flex customers use an average of 9 modules. This compares to just 21% of its overall customer base using 8+ modules. Flex customers land bigger, consume more of Falcon and accelerate CrowdStrike’s push for platform consolidation. It minimizes friction associated with purchasing new modules by allowing customers to simply say they want to add something. CrowdStrike can seamlessly provide it, rather than requiring a formal procurement period. This all supports lofty retention rates.

Customers seem to be loving this means of buying, as most of them are comfortably ahead of consumption plans in their given contracts. That should mean more usage-based revenue and larger deals in the future.

The incentives and extended trials CRWD offered following the outage worked as planned. They have “seeded” their client base with highly popular and sticky tools, which is why the team is gaining more confidence in accelerated ARR growth as we enter FY 2027. At the same time, this means recognized subscription revenue isn’t as positively correlated with ARR as it normally is. That's temporary, as these incentives will go away and recognized revenue will mirror run-rate.

c. Demand

- Beat revenue estimates by 1.8% & beat guidance by 2%.

- Its 26.5% 2-year revenue CAGR compares to 29% last quarter and 31.9% 2 quarters ago.

- Slightly beat annual recurring revenue (ARR) estimates by 0.4%.

- Beat net new ARR (NNARR) estimates by 5.8% and beat internal guidance by at least 4.7%.

- Easily met guidance calling for Q/Q NNARR growth with $221M in NNARR vs. $194M Q/Q.

- Record Q2 for NNARR.

- Beat remaining performance obligation (RPO) estimates by 5.9%. This is a great indicator of where future growth rates are heading once we get through CCPs. 47% Y/Y growth is highly encouraging in my opinion. This growth engine is turning into a coiled spring that’s simply waiting for an end of the finite impact of customer concessions following the outage.

- Its total addressable market (TAM) rose 16% Y/Y to $116B. It sees this moving to $250B over the next four years, thanks to AI.

Y/Y revenue growth wasn’t supposed to begin accelerating sequentially, and NNARR wasn’t supposed to return to Y/Y growth, until next quarter. Both came a quarter early. This was credited to excellent execution and AI tailwinds. AI is helping demand for every category of its business.

As a reminder, CrowdStrike incurs virtually all client costs when it onboards its first module with a new customer. Subsequent module purchases are essentially pure margin. As long as the demand trends above remain positive, the margin trends below will follow. That margin benefit isn’t currently showing up, but it will in two quarters when the rest of its CCPs expire.

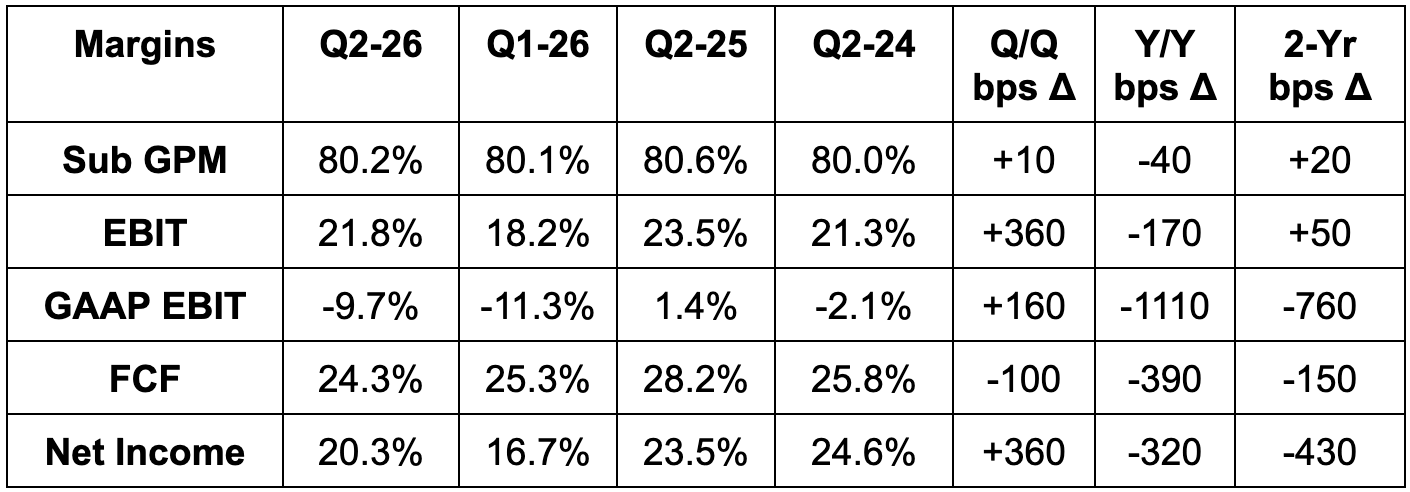

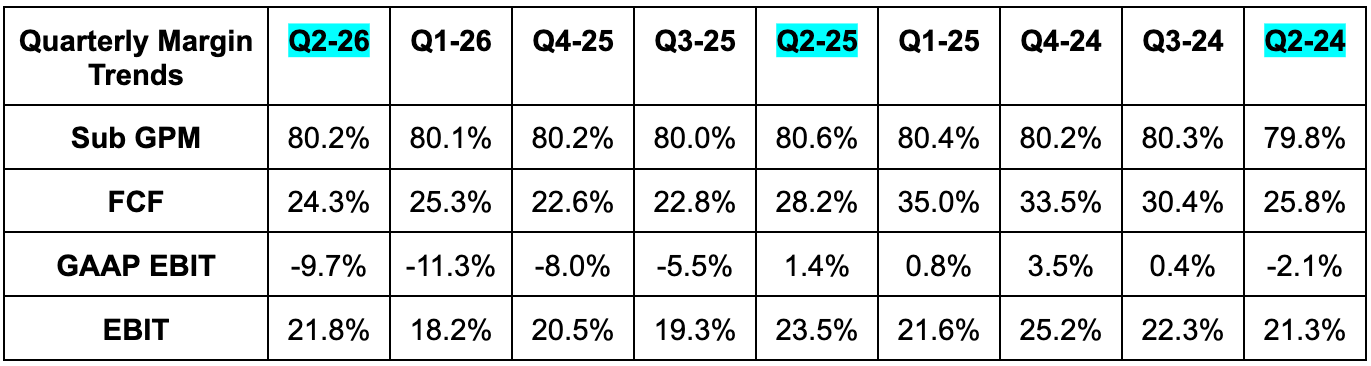

d. Profits & Margins

- Beat EBIT estimate by 10.4% & beat guidance by 10.9%.

- Beat $0.83 EPS estimate & identical guidance by $0.10 each. The beat would have been between $0.03 and $0.04 smaller without some tax favorability from the “Big Beautiful Bill.”

- Beat free cash flow (FCF) estimates by 19%.

GAAP EBIT margin ex-outage would have been -3.3%. FCF margin would have been 26.7% ex-outage.

e. Balance Sheet

- $4.97B in cash & equivalents.

- $744M in debt.

- Diluted shares fell slightly Y/Y.

f. Guidance & Valuation

- Slightly raised full-year revenue guidance, which slightly missed estimates.

- This includes abnormally large prudence for its professional services business, following a standout quarter. That’s an easy way to lower the bar for outperformance in 3 months.

- Raised annual EBIT guidance by 2.9%, which beat estimates by 2.4%.

- Raised annual $3.50 EPS guidance by $0.16, which beat estimates by $0.15.

- Maintained expectations of 40%+ Y/Y NNARR growth during the second half of the fiscal year.

- This represents 22% Y/Y NNARR growth for the full year.

- They’re on track to cross $5B+ in ARR this year, representing 18% Y/Y growth despite large headwinds. This compares to estimates calling for $5.13B. They set a 22% Y/Y NNARR growth target, which implies ending ARR for the year of closer to $5.2B.

CrowdStrike trades for 91x forward EBIT and 77x forward FCF. EBIT is only expected to rise by 18% this year with 10% FCF growth. This is outage-related. Starting next year, EBIT is expected to compound at a 37% 2-year clip, with FCF compounding of 40% over that time.

g. Call & Release Highlights

Just like last quarter, CFO Burt Podbere told investors that he’s increasingly confident in NNARR acceleration through the end of the year and the 22% Y/Y NNARR growth guide. This relies on a much better end of the year, so why are they confident? A few reasons. Flex and AI tailwinds are strong and their pipeline activity is equally strong. That part is structural. But, to reiterate, this is being greatly helped by CCP headwinds beginning to ease. That’s more of an ephemeral tailwind. The majority of that easing will happen as we move from Q4 to Q1 (largest CCP cohorts expiring) which means that the acceleration is more so tied to retention than banking on great new customer growth. Considering retention remained very strong through the global outage, I’m confident in assuming they’re right.

They maintained their 30% EBIT margin, 36% FCF margin and 83.5% subscription GPM targets, as well as their path to $10B in ARR. They continue to expect to exit this year with a 27% FCF margin and deliver a 30%+ FCF margin next year.

Flex Thriving & Leading to More Platform-Level Adoption:

The combination of its holistic SOC offering and more malleable model purchasing with Flex is turning out to be a great one. An interoperable, single-agent, end-to-end security offering across endpoint, cloud, identity, data, exposure management and threat intelligence is peanut butter. Flex is jelly. Despite having just 1,000 Flex customers vs. 780 Q/Q, it enjoyed 61 net new re-flexes (using up credits early & coming back for more) in this quarter alone vs. 39 last quarter. 10% of its Flex customers have already re-upped on their commitments. Clearly, customers are exhausting usage far more quickly than expected, which goes to show how useful Falcon is and how powerfully this will fuel growth. Specifically, re-flexing customers from this quarter were just 5 months into multi-year contracts before buying more. Re-flexing customers deliver a 50% ARR boost, helping CRWD enjoy an average ARR per Flex customer of over $1M. All in all, $10M+ customers doubled Y/Y and it crossed 800 customers with $1M+ in ARR.

- For even more clear evidence that Flex is driving this, 60% of Flex customers have 8+ modules vs. under 30% for its full client base.

“Seeing so many customers re-flex validates the flex model and illustrates customers accelerating consolidation with CrowdStrike.” – Founder/CEO George Kurtz

This helps explain why non-endpoint growth is so excellent and why competitive win rates keep rising quarter after quarter. Amazing what happens when you make it easier for your customers to use already elite products. Specifically, cloud ARR rose 35% Y/Y to $700M, identity rose 21% Y/Y to $435M and SIEM rose 95% Y/Y to $430M. Together, these three newer product pillars enjoyed north of 40% Y/Y growth. And now? Enter exposure management as another scaled growth vector to join endpoint, cloud, identity, SIEM and Charlotte AI. This product reached $300M in ARR during the quarter, with a massive runway ahead. Its addition of network vulnerability management a couple quarters ago has been “very well received” and is contributing.
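The "north of 40%" combined growth figure can be sanity-checked from the three segment numbers above. This is a rough sketch: it backs out prior-year ARR from the rounded current ARR and growth rates quoted in this section, so the result is approximate. The function and variable names are my own.

```python
# (current ARR in $M, Y/Y growth rate) as cited in the paragraph above.
pillars = {
    "cloud": (700.0, 0.35),
    "identity": (435.0, 0.21),
    "siem": (430.0, 0.95),
}


def blended_growth(segments: dict[str, tuple[float, float]]) -> float:
    """Y/Y growth of the combined ARR, backing out prior-year ARR per segment."""
    current_total = sum(arr for arr, _ in segments.values())
    prior_total = sum(arr / (1 + growth) for arr, growth in segments.values())
    return current_total / prior_total - 1


combined_arr = sum(arr for arr, _ in pillars.values())
combined_growth = blended_growth(pillars)

print(f"Combined ARR: ${combined_arr:,.0f}M")
print(f"Blended Y/Y growth: {combined_growth:.1%}")
```

With these rounded inputs, the three pillars total about $1.57B in ARR and the blended growth rate lands a little above 42%, which squares with the claim.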

Why are these products doing so well (beyond being part of a world-class suite)? Some of these accolades may help explain things:

- 2025 Gartner Leader for endpoint. Highest rated for both completeness of vision and ability to execute.

- Named a leader in IDC’s CNAPP rankings.

- Named a leader in IDC’s Exposure Management rankings. Great to see.

- Named a SIEM and identity security leader by GigaOm Radar.

- Named as the MDR leader by Frost Radar.

AI Tailwinds Galore:

The theme of Kurtz’s prepared remarks was that AI is driving notable demand accelerations across every business category. Companies are scrambling to safeguard assets from external AI-powered threats, ensure employees are safely using 3rd-party apps, protect data centers, and secure agents. Leadership feels perfectly positioned for its end-to-end security platform to help with all of this, and will lean on its agentic SOC product (Charlotte) to ensure superior autonomy, asset coverage and efficacy. During the quarter, Charlotte grew by more than 85% sequentially. Small base, but fantastic early adoption. That’s probably because of the “immediate ROI” this product delivers, which is quite rare in AI software today.

“CrowdStrike's role in the agentic era is staying ahead of AI-armed threat actors to secure AI at every layer. This begins with the AI model itself and extends to workloads, identities (including non-human agents), and user devices.” – Founder/CEO George Kurtz

While others like Zscaler and Palo Alto may disagree, CRWD sees endpoints as the perfect base for winning in agentic AI – not networks. Per Kurtz, that’s where the majority of AI adoption will occur. AI security “is not a network program” and its mix of endpoint products, scalable data ingestion and SIEM is a “competitive moat” here. They think they’re better positioned to win a large portion of the AI opportunity than anyone else in the space. And while results don’t match that optimism due to current CCPs, nearly 50% Y/Y RPO growth points to thriving future demand and them being dead right.

In AI product news, it added AI upgrades for Systems Security Assessments and Security Operations (SecOps) posture. A blend of security analysts and autonomous software can give customers a full view of their assets (including AI assets) and simulate attacks to uncover weak spots and proactively strengthen security hygiene.

SIEM M&A:

Today, CRWD announced its acquisition of Onum Technology. Per leadership, the SIEM migration process for CRWD’s customers has been chock-full of friction. It has been intimidating and clunky, and has required several disparate 3rd-party vendors. Considering SIEM is required to scalably organize data for AI model and app injection, that’s a big obstacle for AI embrace. Onum fixes this, with native data, real-time data telemetry (collecting and organizing from a wide array of sources), streaming and “in-pipeline detection,” meaning data is scanned and vetted before it gets stored within CrowdStrike’s SIEM offering. That’s so important in the agentic AI era that features data racing around the digital economy with limited checks and balances. It’s the wild west right now, and Onum will help CrowdStrike resolve this issue that everyone’s dealing with.

Per the press release, they’re both the filter and the pipeline, meaning they can seamlessly collect, process, analyze, organize and unleash unstructured data… all now natively in the Falcon platform. In essence, this bridges subtle gaps between AI security and 1st and 3rd-party data usage… which (along with great models) is what makes AI security stand out. To me, this will be a welcomed complement to CRWD’s SIEM and its overarching SOC offering. SOC requires clean, high-fidelity data pipelines to actually be useful. CrowdStrike has now acquired those capabilities and will vertically integrate them. This all sounds great, but we love numbers:

- Onum can handle 5x more events per second than the competition.

- Its ability to filter out useless data pre-storage cuts storage costs by up to 50% for customers. CRWD’s SIEM already has a storage cost edge; this will bolster it.

- Onum delivers 70% faster incident response than the competition.

- Onum also enables 40% lower data overhead per customer.

Onum did somewhere around $10M in revenue last year, so this purchase is small and is one that I fully support. Buying Humio to enter SIEM was a game-changer for this firm; I see Onum as another one. Great purchase.

- CRWD landed a global 2,000 communications platform for its SIEM product in a 7-figure deal.

Identity Security:

CrowdStrike added CrowdStrike Signal, bolstering its threat detection capabilities within the identity business. It’s similar to signature-based detection in that both build profiles of typical activity patterns to gain a better idea of what usage patterns should look like. On the other hand, signature-based detection relies on already understood threats and what those looked like. It cannot use this information to learn, improve and create coverage for brand new threats like Signal can. Also in identity, it enhanced granular personalization for its operational threat intelligence product – shockingly thanks to AI. Finally, the PAM offering (already defined) it debuted last quarter is unsurprisingly off to a great start. They signed a “leading global consulting firm” and displaced a legacy PAM in the process. If Palo Alto is right about identity security nearing an AI inflection point, CrowdStrike’s product suite is already positioned to benefit. No large M&A needed.

Cloud Security:

CrowdStrike landed a Fortune 500 energy company in a 7-figure deal. They allowed this company to turn “months of manual work into minutes” in a 10-product up-sell. Kurtz continues to see cloud-based hygiene tools as table stakes and the real value stemming from cloud workload protection (CWP; already defined). They view their runtime CWP product as best-in-class, without much competition. Hence the 35% scaled growth.

Partner News:

Added Falcon Cloud Security to Nvidia’s NIM microservices and NeMo offerings. The two just keep getting closer, following last quarter’s announcement that Falcon will be the cybersecurity standard for securing Nvidia hardware and software going forward. Hard to think of a more impactful stamp of approval. Maybe Amazon would be in that ballpark, and CRWD keeps getting closer with that tech giant too. The two companies debuted new agentic integrations and security tools available on AWS’s marketplace. It also added Falcon for AWS Security Incident Response. And in other Amazon news, Amazon Business Prime will use Falcon Go for small and medium businesses (SMBs). This unlocks companies with fewer than 100 employees that are simply too small for CRWD to directly pursue. Finally, it deepened its Red Canary partnership immediately after they were bought by Zscaler, pointing to a continued strong relationship between the two vendors. This entailed moving their entire EDR business to Falcon in a multi-million dollar deal.

h. Take

This quarter gave me everything that I wanted. A net new ARR acceleration ahead of schedule, with reiterated margin recovery timelines, fantastic cross-selling traction, more cash printing and higher competitive win rates. And I love the small acquisition a lot more than paying up for CyberArk. As I’ve said for a few quarters, the way this company handled the 2024 outage was admirable. They turned a bad blunder into an opportunity to create stickier customers with more modules and revenue. They showed the entire world how mission critical and irreplaceable they truly are. While Palo Alto may be bigger today, I see CRWD’s growth engine pushing it to become the largest pure-play cybersecurity firm (by market cap and revenue) in the decades to come. Expensive? Yes. Deserves to be? Double yes. As we move into 2027, I am (like the team) exceedingly confident that growth will sharply reaccelerate, and margins will restart their consistent expansionary pattern. I’m eyeing $390 to add to my stake (70x forward FCF); we’ll see if I get that opportunity.
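To show how the $390 target relates to the 77x forward FCF multiple quoted in the valuation section, here is a rough sketch. It assumes both multiples are built on the same forward FCF per share estimate and that both are rounded, so treat the output as a ballpark rather than a precise quote. The function name is hypothetical.

```python
def implied_price(target_price: float, target_multiple: float,
                  current_multiple: float) -> float:
    """Back out the share price implied by a different multiple on the same FCF."""
    fcf_per_share = target_price / target_multiple  # assumed identical in both cases
    return fcf_per_share * current_multiple


# $390 at 70x forward FCF vs. the 77x multiple cited earlier in this review.
price_at_77x = implied_price(390.0, 70.0, 77.0)
pullback_needed = 1 - 390.0 / price_at_77x

print(f"Price implied by 77x: ${price_at_77x:,.0f}")
print(f"Pullback needed to reach $390: {pullback_needed:.1%}")
```

Under those assumptions, the 77x multiple corresponds to a share price in the high $420s, so the $390 level implies a pullback of roughly 9%.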

3. Nvidia (NVDA) Earnings Review

I think there are a few extra typos in this review. It’s been a very long week between the website debut and earnings season. I apologize if there’s any added sloppiness in here.

a. Nvidia 101

Nvidia designs semiconductors for data center, gaming and other use cases. It’s unanimously considered the technology leader in chips meant for accelerated compute and Generative AI (GenAI) use cases. While that’s where it specializes, it does a lot more. Its toolkit includes chips, servers, switches, networking and cutting edge software. Ownership of more of the GenAI infrastructure stack means opportunity for software-based product optimization and unique value creation. That’s so important.

It designs the entire next-gen data center layout with slick software integrations so customers can enjoy the best of accelerated compute. Nvidia calls these data centers “AI factories.”

The following items are important acronyms and definitions to know for this company:

Chips:

- GPU: Graphics Processing Unit. This is an electronic circuit used to process visual information and data.

- CPU: Central Processing Unit. This is a different type of electronic circuit that carries out tasks/assignments and data processing from applications. Teachers will often call this the “computer’s brain.”

- Blackwell: Nvidia’s modern GPU architecture designed for accelerated compute and GenAI. Key piece of the DGX supercomputer platform. Rubin is the next platform after Blackwell. Then Feynman.

- B100: Its Blackwell 100 Chip. (B200 is Blackwell 200).

- Ampere: The GPU architecture that Hopper replaces for a 16x performance boost.

- L40S: Another, more barebones GPU chipset based on Ada Lovelace architecture. This works best for less complex needs.

- Grace: Nvidia’s new CPU architecture that is designed for accelerated compute and GenAI.

- GB200: Its Grace Blackwell 200 Superchip with Nvidia GPUs and ARM Holdings tech.

Connectivity:

- Nvidia Link Switches: Designed to aggregate and connect (or scale up) Nvidia GPUs within one or a couple of server racks. This creates a sort of “mega-GPU.” GPU connections power greater efficiency, performance and computing scale (so cost advantages).

- The newest system allows for 576 total GPUs to be connected.

- InfiniBand: Standardized interconnectivity tech providing an ultra-low latency computing network. This can connect larger batches of server racks and compute clusters for more scalability.

- Nvidia Spectrum X: Similar to InfiniBand functionality and performance but Ethernet-based.

- Ethernet is vital for connecting larger compute clusters.

- This can connect 100,000 Hopper GPUs, like xAI did with its Colossus Supercomputer. Nvidia wants to soon push that to the millions.

NVLink Fusion allows companies to build “semi-custom” AI infrastructure with Nvidia and its integration ecosystem. GPUs are general-purpose in nature. They’re not granularly designed for every single niche use case like an Application-Specific Integrated Circuit (ASIC). This can help Nvidia capture more of that demand by pairing with Marvell and a few other partners and more easily emulate purpose-built hardware.

The Nvidia GB300 NVL72 is its rack-scale computing system. Rack scale means the entire server rack powers computation rather than a single server. Because this includes Blackwell chips and NVLink switches, it’s partially in the compute bucket and partially in networking.

Software, Models & More:

- NeMo: Guided step-functions to build granular GenAI models for client-specific needs. It’s a standardized environment for model creation.

- Cuda: Nvidia-designed computing and program-writing platform purpose-built for Nvidia GPU optimizations. Cuda helps power things like Nvidia Inference Microservices (NIM), which guide the deployment of GenAI models (after NeMo helps build them).

- NIMs help “run Cuda everywhere” — in both on-premise and hosted cloud environments.

- GenAI Model Training: One of two key layers to model development. This seasons a model by feeding it specific data.

- GenAI Model Inference: The second key layer to model development. This pushes trained models to create new insights and uncover new, related patterns. It connects data dots that we didn’t realize were related. Training comes first. Inference comes second… third… fourth etc.

DGX: Nvidia’s full-stack platform combining its chipsets and software services.

b. Key Points

- $0 in H20 sales to China. Still strong Q/Q data center growth.

- Blackwell ramping on schedule; Rubin on schedule for 2026 volume production.

- Busy quarter for European deals and partnerships.

- No China revenue is baked into forward guidance.

c. Demand

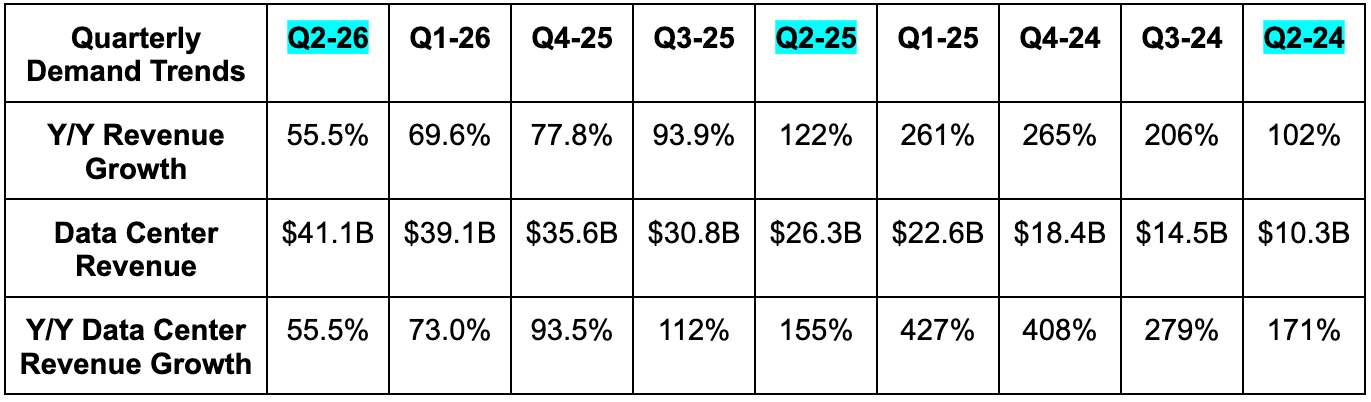

- Beat revenue estimates by 1.1% & beat guidance by 3.8%.

- Slightly missed data center revenue estimates.

- Data center growth would be 71% Y/Y if China demand restrictions were not put in place.

- Beat gaming revenue estimates by 12.4%.

- Beat professional visualization (Prof Vis) revenue estimates by 13.0%.

- Missed automotive revenue estimates by 1.1%.

Nvidia did not sell any H20 chips to China during the quarter. It did sell $650M of these lower power chips to other vendors. They’re waiting on new rules to be confirmed before shipments can potentially resume. A lot of moving pieces here. More on this in the guidance section.

China revenue is now just a low-single-digit % of its data center revenue. Singapore revenue was 22% of total this quarter, as U.S. clients continue to use that country for billing.

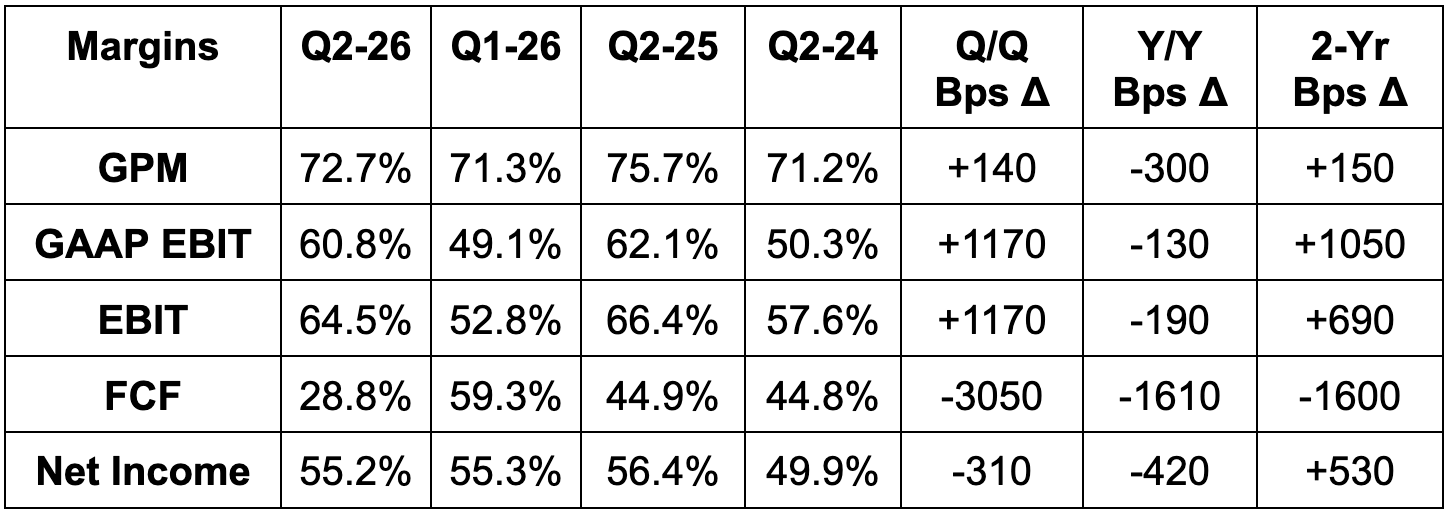

d. Profits & Margins

Q1-26 margins adjust for the China inventory headwind.

- Beat 72.1% GPM estimates by 60 basis points (bps; 1 basis point = 0.01%) & beat guidance by 70 bps.

- Without a $180M boost from H20 inventory releases (due to the $650M in sales to other non-China customers) GPM would have been 72.3% instead of 72.7%.

- Beat EBIT estimates by 2.7% & beat guidance by 6.3%.

- OpEx rose by 6% Y/Y due to higher compute and infrastructure costs. EBIT margin expansion was held back by GPM contraction. That contraction is related to Blackwell scaling, which is presently lower margin than Hopper.

- Beat $1.01 EPS estimate by $0.07 & beat guide by $0.10.

- $1.08 in EPS would have been $1.04 excluding the H20 inventory release.
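Since basis points come up constantly in these reviews, here is a small sketch that reconciles the margin and EPS figures in the bullets above. All inputs come from this section; the helper names are my own.

```python
BPS_PER_PERCENTAGE_POINT = 100  # 1 basis point = 0.01 percentage points


def add_bps(margin_pct: float, bps: float) -> float:
    """Shift a margin (in %) by a number of basis points."""
    return round(margin_pct + bps / BPS_PER_PERCENTAGE_POINT, 4)


def spread_in_bps(margin_a_pct: float, margin_b_pct: float) -> float:
    """Difference between two margins (in %), expressed in basis points."""
    return round((margin_a_pct - margin_b_pct) * BPS_PER_PERCENTAGE_POINT, 6)


# 72.1% GPM estimate, beaten by 60 bps.
reported_gpm = add_bps(72.1, 60)

# GPM with vs. without the H20 inventory release.
h20_release_boost_bps = spread_in_bps(72.7, 72.3)

# $1.01 EPS estimate, beaten by $0.07; $1.04 excluding the release.
reported_eps = round(1.01 + 0.07, 2)
eps_boost = round(reported_eps - 1.04, 2)

print(f"Reported GPM: {reported_gpm}%")
print(f"H20 release GPM boost: {h20_release_boost_bps:.0f} bps")
print(f"Reported EPS: ${reported_eps:.2f} (H20 release added ${eps_boost:.2f})")
```

The figures tie out: the 60 bps beat lands on the 72.7% reported GPM, and the H20 release accounts for 40 bps of margin and $0.04 of EPS.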

e. Balance Sheet

- $56.8B in cash & equivalents.

- $8.5B in debt.

- Diluted shares fell by 1% Y/Y.

- Inventory jumped from $11B to $15B Q/Q to support Blackwell demand.

f. Guidance & Valuation

- Beat Q3 revenue estimates by 2.5%.

- Met Q3 GPM estimates.

- Beat Q3 EBIT estimates by 3.5%.

If licenses and regulations become clearer and they can resume shipments, they think they can ship around $3.5B to China. Notably, this was not included in guidance. That’s the right decision. We’ve seen some rumors out of China about their government telling companies not to buy these chips and more rumors about Nvidia pausing production as a result. Better to leave room for upside surprises.

The company still expects to exit this year with roughly a 75% gross margin. They think OpEx will rise by nearly 40% for the year, which is higher than previous 35% growth forecasts. Finally, they continue to expect $20B in sovereign AI revenue this year.

Nvidia trades for 30x forward EPS. EPS is expected to compound at a 42% clip for the next two years.
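To put that multiple and growth rate together, here is a hedged sketch of how the earnings multiple would compress if estimates prove accurate. It assumes a flat share price and that the 42% compounding starts from the same forward EPS base used in the 30x multiple. Both are simplifying assumptions of mine, not company guidance. The function name is hypothetical.

```python
def multiple_after_growth(forward_multiple: float, annual_growth: float,
                          years: int) -> float:
    """Multiple on future earnings, holding today's share price constant."""
    return forward_multiple / (1 + annual_growth) ** years


# 30x forward EPS with EPS compounding at 42% for two years.
multiple_in_two_years = multiple_after_growth(30.0, 0.42, 2)

print(f"Multiple on EPS two years out: {multiple_in_two_years:.1f}x")
```

Under those assumptions, today's price would equate to roughly 15x earnings two years beyond the forward estimate.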

g. Call Highlights

Data Center – Blackwell & Rubin:

Blackwell revenue rose 17% Q/Q as Blackwell Ultra (GB300) enjoys “extraordinary demand” and ramps shipments on schedule. This generated “tens of billions” in revenue, and was a seamless transition, considering how similar the core hardware is compared to GB200. This enabled them to reach a server rack production rate of 1,000 per week (GB300 server racks have 72 GPUs per unit).
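For a sense of scale, the rack figures above translate into GPU volumes as follows. The weekly number uses only the figures cited here; the annualized number additionally assumes the production rate stays flat for 52 weeks, which is purely an illustrative assumption of mine.

```python
RACKS_PER_WEEK = 1_000   # production rate cited above
GPUS_PER_RACK = 72       # GPUs in each GB300 server rack
WEEKS_PER_YEAR = 52      # illustrative: assumes a flat production rate

gpus_per_week = RACKS_PER_WEEK * GPUS_PER_RACK
annualized_gpus = gpus_per_week * WEEKS_PER_YEAR

print(f"GPUs per week: {gpus_per_week:,}")
print(f"Annualized at a flat rate: {annualized_gpus:,}")
```

That is 72,000 GPUs leaving the line in racks every week at the cited rate.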

Customers like CoreWeave are enjoying 10x inference performance and energy efficiency gains with GB300 vs. Hopper, which should keep demand humming. I would’ve loved to see them compare performance to the 2nd-newest Blackwell product instead of the somewhat dated Hopper platform, but that’s still an impressive performance improvement nonetheless. The new Nvidia GB300 NVL72 (which could be put in the networking section later in this piece) is what enables significant and incremental scale-up gains to pack more compute into each server rack and enhance overall efficiency. Please note that most Blackwell-based revenue is tied to selling these racks, rather than standalone GPUs.

“Blackwell has set the benchmark, as it is the new standard for AI inference performance.” – CFO Colette Kress

Blackwell was the top-rated GPU platform across every MLPerf training benchmark. The team basically told us they expect Blackwell Ultra to top leaderboards when new rankings come out this month. That should be great to hear for bulls, as there have been some rumors swirling about AMD closing the technology gap.

Rubin is on track for volume production next year. And Nvidia is confident in maintaining an annual platform release cadence. The leader continues to sprint, which makes it very tough to catch them. Notably, AMD has also moved to an annual launch rhythm to match the GPU leader.

Data Center – Runway:

The demand runway will continue to be based on a few things. First is Nvidia’s technology lead. If they remain ahead, that will obviously make finding buyers for whatever they can sell easier. Falling behind this year wouldn’t be the end of the world, considering how supply constrained their chips remain. They remain sold out of everything they can make and legally sell. But at the same time, losing their lead would certainly make commanding the lion’s share of the GPU opportunity in the years to come harder. The MLPerf ratings and expectations of vast performance gains with Rubin bode well for the lead being maintained. And $600B in 2025 CapEx from their large customers points to overall demand levels soaring for the market leader.

Second, the pace of hardware improvement also matters dearly. This ties to being the tech leader, but there’s more to it. If you’re improving things 100% per year instead of 5%, not only is your lead growing, but you’re amplifying a sense of urgency for customers to buy your products. If Microsoft starts buying Rubin chips that are vastly better than Blackwell, all other hyperscalers will be forced to follow suit to avoid being left behind. It’s a compelling domino effect that I’ve referenced in previous reviews. If the pace of Y/Y productivity gains slows, then the need to buy those brand new chips (at their sky-high price tags) will diminish to a certain extent. Things look quite good in this regard. Software-level optimizations from Cuda have delivered a 50x energy efficiency boost for Blackwell vs. Hopper, while a proprietary pre-training method for the newest Blackwell chip is boosting training speed by 7x.

The final piece is how much more compute new models consume vs. predecessors. And simply put, reasoning models need 100x-1000x the overall compute of first-generation models. So that looks good too.

Data Center – Networking Equipment:

Do not sleep on the importance of elite networking equipment. While GPUs get most of the attention, Nvidia’s ability to scale up with NVLink is what helps make its server racks perform better than the others. And? Its ability to connect more of these servers together (scale-out) matters dearly too. This can boost overall efficiency routinely by 10%-30% in data centers. That’s a massive edge in a competitive field. It does this scaling out through InfiniBand or the Ethernet-based version called Spectrum-X. This quarter, it debuted Spectrum-XGS as a new model, which provides the “highest throughput and lowest latency network for Ethernet AI workloads.” CoreWeave is an early user of this product, which helped drive 98% Y/Y networking growth to reach $7.3B.

- InfiniBand doubled revenue Q/Q thanks to 2x performance gains.

- It now has an InfiniBand product for quantum computing. Eventually that technology could potentially help with more meaningful scaling out.

More on Data Center:

Nvidia debuted its new datacenter servers (Nvidia RTX Pro 6000 Blackwell). These are already being used by 90 companies, as the product is purpose-built for use cases like running simulations, 3D rendering etc.

- NVLink Fusion is off to a great start. This offers semi-custom AI infrastructure, with more ability to tweak infrastructure for specific use cases and workloads. Fugaku Next (Japan) will use this product.

- Helped launch OpenAI’s new models with industry-leading performance running on Blackwell.

Software-Level Optimizations:

We very briefly glossed over Nvidia software leading to better GPU performance, but that deserves more attention. There’s a reason why AMD is working so hard on their ROCm software competitor. Nvidia has the luxury of having the largest base of developers in the space, and a library of software (aforementioned NIMs) that these people can easily tap into for their work. A lot of this work entails making GPUs better and better over time. That not only helps Nvidia stay ahead, but it’s also how Hopper revenue is still growing Q/Q. Those chips would be entirely antiquated if not for Cuda-based work extracting more performance out of them. And not only does this fortify its hardware moat, but it also provides more opportunity for cross-selling models, templates and apps to juice retention and customer stickiness.

Europe & Sovereign AI:

It was an encouragingly busy quarter in Europe, as it deepened partnerships with titans like Novo Nordisk and a few robotics companies in that region (more later). On the public sector side, Nvidia is also working with France, Germany, Italy and Spain to build an industrial AI cloud for European manufacturers. This EU news was joined by expansion of its supercomputer framework (DGX) to Europe. Maybe the regulatory environment there is getting slightly more favorable? All in all, the EU has more than $20B earmarked for data centers, with a lot of that expected to go to Nvidia. Nvidia Nemotron will also be used for sovereign AI optimization across the EU and Middle East.

Automotive & Robotics:

- Nvidia’s autonomous vehicle platform (DRIVE) was fully rolled out.

- Also debuted Nvidia Halos safety platform to guardrail and secure robotics development.

- It also rolled out an upgrade to its Blackwell computing platforms for automotive and robotics (Thor), which offers an “order of magnitude greater AI performance and energy efficiency” vs. the predecessor. Meta, Amazon Robotics and Boston Dynamics have all adopted Thor.

- 2M developers have now built 1K+ robotics applications on its platform. These consume a lot more compute than non-physical AI applications, providing another growth lever for the firm.

- Nvidia Omniverse with Cosmos (digital twin simulator for low-stakes testing) landed a larger expansion with Siemens. They rolled out new foundational models this quarter.

Gaming & AI Personal Computers:

- Added new GeForce RTX GPUs for gaming. This was its best gaming GPU launch ever.

- Blackwell will be added to GeForce next month.

- For AI PCs, the GeForce RTX desktop GPU doubles performance vs. the predecessor and offers superior ray tracing and rendering.

Application-Specific Integrated Circuit (ASIC) Competition:

Huang is not worried about ASIC competition from Broadcom. While that will take some of the overall demand for specific, non-general purpose workloads, they expect demand for GPUs to remain fantastic. That has a lot to do with leading performance and the integrated, server-rack-level systems it’s providing. These are very hard to emulate.

More on China:

It’s worth noting that China could be a $50B annual opportunity for Nvidia if it were allowed to freely sell whatever it wanted to (including Blackwell). That compares to $15B-$20B if it could freely sell just the H20 chips. They continue to talk with the current administration to advocate for the opening of that large market. They remain cautiously optimistic that they will eventually be allowed to sell Blackwell. The question is… will that be in 5 years when that tech is antiquated?

h. Take

55% Y/Y growth at this scale, with one of your largest markets shuttered and a 60% GAAP EBIT margin, is insane in the best of ways. That cannot be overstated. While the beats weren’t quite as large as we’ve grown used to and while the data center miss was surprising, I think that has more to do with difficult China modeling than anything else. When zooming out, all signs point to the demand runway remaining long and this company leading the GPU race in the years to come. Rubin is on schedule (so those rumors were bogus), automotive, robotics and gaming are all rocking and the data center business continues to enjoy the most impressive multi-year demand ramp that I’ve ever seen. This wasn’t amazing compared to its last few quarters. But it was amazing compared to quite literally everyone else. I wouldn’t be surprised to see the stock take a breather following the headline data center number coming in a bit light, but I think the things that matter for bulls (hints of future AI demand runway) remain really good. That’s what matters most for the long-term investor.